[ Optimization repository ] [ GCC ] [ LLVM ] [ MILEPOST optimization predictor ]

[ Andrew Ng's ML course ] [ Google ML crash course ] [ Facebook Field Guide to ML ]

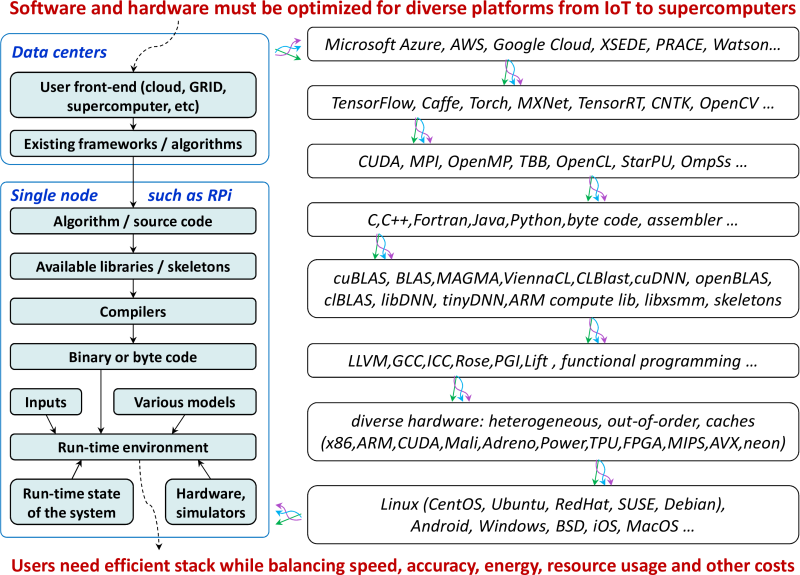

Developing efficient software and hardware has never been harder whether it is for a tiny IoT device or an Exascale supercomputer. Apart from the ever growing design and optimization complexity, there exist even more fundamental problems such as lack of interdisciplinary knowledge required for effective software/hardware co-design, and a growing technology transfer gap between academia and industry.

We introduce our new educational initiative to tackle these problems by developing Collective Knowledge (CK), a unified experimental framework for computer systems research and development. We use CK to teach the community how to make their research artifacts and experimental workflows portable, reproducible, customizable and reusable while enabling sustainable R&D and facilitating technology transfer. We also demonstrate how to redesign multi-objective autotuning and machine learning as a portable and extensible CK workflow. Such workflows enable researchers to experiment with different applications, data sets and tools; crowdsource experimentation across diverse platforms; share experimental results, models, visualizations; gradually expose more design and optimization choices using a simple JSON API; and ultimately build upon each other's findings.

As the first practical step, we have implemented customizable compiler autotuning, crowdsourced optimization of diverse workloads across Raspberry Pi 3 devices, reduced the execution time and code size by up to 40%, and applied machine learning to predict optimizations. We hope such approach will help teach students how to build upon each others' work to enable efficient and self-optimizing software/hardware/model stack for emerging workloads.

1 Introduction

Many recent international roadmaps for computer systems research

appeal to reinvent computing [1, 2, 3].

Indeed, developing, benchmarking, optimizing and co-designing hardware and software

has never been harder, no matter if it is for embedded and IoT devices,

or data centers and Exascale supercomputers.

This is caused by both physical limitations of existing technologies

and an unmanageable complexity of continuously changing computer systems

which already have too many design and optimization choices and objectives

to consider at all software and hardware levels [4],

as conceptually shown in Figure 1.

That is why most of these roadmaps now agree with our vision

that such problems should be solved in a close collaboration

between industry, academia and end-users [5, 6].

However, after we initiated artifact evaluation (AE) [7, 8] at several premier ACM and IEEE conferences to reproduce and validate experimental results from published papers, we noticed an even more fundamental problem: a growing technology transfer gap between academic research and industrial development. After evaluating more than 100 artifacts from the leading computer systems conferences in the past 4 years, we noticed that only a small fraction of research artifacts could be easily customized, ported to other environments and hardware, reused, and built upon. We have grown to believe that is this due to a lack of a common workflow framework that could simplify implementation and sharing of artifacts and workflows as portable, customizable and reusable components with some common API and meta information vital for open science [9].

At the same time, companies are always under pressure and rarely have time to dig into numerous academic artifacts shared as CSV/Excel files and ``black box'' VM and Docker images, or adapt numerous ad-hoc scripts to realistic and ever changing workloads, software and hardware. That is why promising techniques may remain in academia for decades while just being incrementally improved, put on the shelf when leading students graduate, and ``reinvented'' from time to time.

Autotuning is one such example: this very popular technique has been actively researched since the 1990s to automatically explore large optimization spaces and improve efficiency of computer systems [10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33]. Every year, dozens of autotuning papers get published to optimize some components of computer systems, improve and speed up exploration and co-design strategies, and enable run-time adaptation. Yet, when trying to make autotuning practical (in particular, by applying machine learning) we faced numerous challenges with integrating such published techniques into real, complex and continuously evolving software and hardware stack [5, 4, 6, 9].

Eventually, these problems motivated us to develop a common experimental framework and methodology similar to physics and other natural sciences to collaboratively improve autotuning and other techniques. As part of this educational initiative, we implemented an extensible, portable and technology-agnostic workflow for autotuning using the open-source Collective Knowledge framework (CK) [34, 35]. Such workflows help researchers to reuse already shared applications, kernels, data sets and tools, or add their own ones using a common JSON API and meta-description [36]. Moreover, such workflows can automatically adapt compilation and execution to a given environment on a given device using integrated cross-platform package manager.

Our approach takes advantage of a powerful and holistic top-down methodology successfully used in physics and other sciences when learning complex systems. The key idea is to let novice researchers first master simple compiler flag autotuning scenarios while learning interdisciplinary techniques including machine learning and statistical analysis. Researchers can then gradually increase complexity to enable automatic and collaborative co-design of the whole SW/HW stack by exposing more design and optimization choices, multiple optimization objectives (execution time, code size, power consumption, memory usage, platform cost, accuracy, etc.), crowdsource autotuning across diverse devices provided by volunteers similar to SETI@home [37], continuously exchange and discuss optimization results, and eventually build upon each other's results.

We use our approach to optimize diverse kernels and real workloads such as

zlib in terms of speed and code size by crowdsourcing compiler flag autotuning

across Raspberry Pi3 devices using the default GCC 4.9.2 and the latest GCC

7.1.0 compilers.

We have been able to achieve up to 50% reductions in code size and from 15%

to 8 times speed ups across different workloads over the ``-O3'' baseline.

Our CK workflow and all related artifacts are available at GitHub

to allow researchers to compare and improve various exploration strategies

(particularly based on machine learning algorithms such as KNN, GA, SVM,

deep learning, though further documentation of APIs is still

required) [24, 6].

We have also shared all experimental results in our open repository of

optimization knowledge [38, 39] to be

validated and reproduced by the community.

We hope that our approach will serve as a practical foundation for open, reproducible and sustainable computer systems research by connecting students, scientists, end-users, hardware designers and software developers to learn together how to co-design the next generation of efficient and self-optimizing computer systems, particularly via reproducible competitions such as ReQuEST [40].

This technical report is organized as follows. Section 2 introduces the Collective Knowledge framework (CK) and the concept of sharing artifacts as portable, customizable and reusable components. Section 3 describes how to implement a customizable, multi-dimensional and multi-objective autotuning as a CK workflow. Section 4 shows how to optimize compiler flags using our universal CK autotuner. Section 5 presents a snapshot of the latest optimization results from collaborative tuning of GCC flags for numerous shared workloads across Raspberry Pi3 devices. Section 6 shows optimization results of zlib and other realistic workloads for GCC 4.9.2 and GCC 7.1.0 across Raspberry Pi3 devices. Section 7 describes how implement and crowdsource fuzzing of compilers and systems for various bugs using our customizable CK autotuning workflow. Section 8 shows how to predict optimizations via CK for previously unseen programs using machine learning. Section 9 demonstrates how to select and autotune models and features to improve optimization predictions while reducing complexity. Section 10 shows how to enable efficient, input-aware and adaptive libraries and programs via CK. Section 11 presents CK as an open platform to support reproducible and Pareto-efficient co-design competitions of the whole software/hardware/model stack for emerging workloads such as deep learning and quantum computing. We present future work in Section 12. We also included Artifact Appendix to allow students try our framework, participate in collaborative autotuning, gradually document APIs and improve experimental workflows.

2 Converting ad-hoc artifacts to portable and reusable components with JSON API

Artifact sharing and reproducible experimentation are key

for our collaborative approach to machine-learning based optimization

and co-design of computer systems, which was first prototyped

during the EU-funded MILEPOST project [5, 41, 24].

Indeed, it is difficult, if not impossible, and time consuming to build useful predictive models

without large and diverse training sets (programs, data sets),

and without crowdsourcing design and optimization space exploration

across diverse hardware [9, 6].

While we have been actively promoting artifact sharing for the past 10 years since the MILEPOST project [5, 42], it is still relatively rare in the community systems community. We have begun to understand possible reasons for that through our Artifact Evaluation initiative [7, 8] at PPoPP, CGO, PACT, SuperComputing and other leading ACM and IEEE conferences which has attracted over a hundred of artifacts in the past few years.

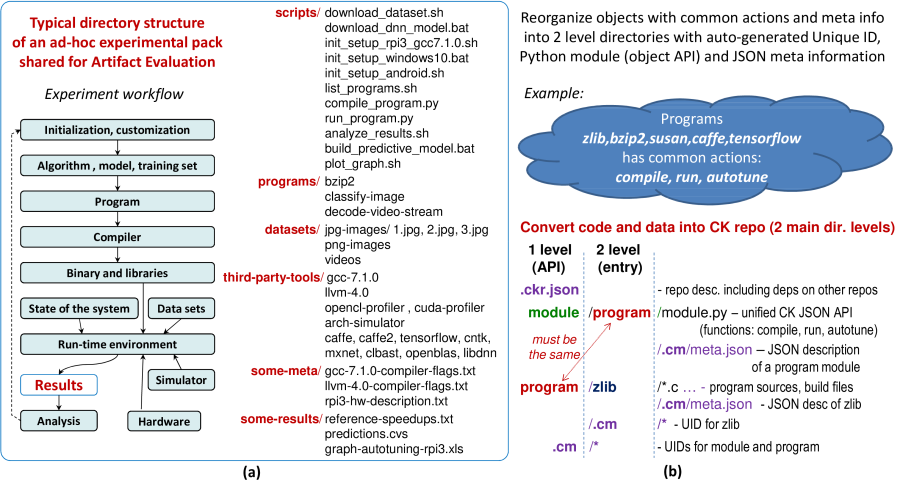

Unfortunately, nearly all the artifacts have been shared simply as zip archives, GitHub/GitLab/Bitbucket repositories, or VM/Docker images, with many ad-hoc scripts to prepare, run and visualize experiments, as shown in Figure 2a. While a good step towards reproducibility, such ad-hoc artifacts are hard to reuse and customize as they do not provide a common API and meta information.

Some popular and useful services such as Zenodo [43] and FigShare [44] allow researchers to upload individual artifacts to specific websites while assigning DOI [45] and providing some meta information. This helps the community to discover the artifacts, but does not necessarily make them easy to reuse.

After an ACM workshop on reproducible research methodologies (TRUST'14) [46] and a Dagstuhl Perspective workshop on Artifact Evaluation [8], we concluded that compute systems research lacked a common experimental framework in contrast with other sciences [47].

Together with our fellow researchers, we also assembled the following wish-list for such a framework:

●

●

●

●

●

●

●

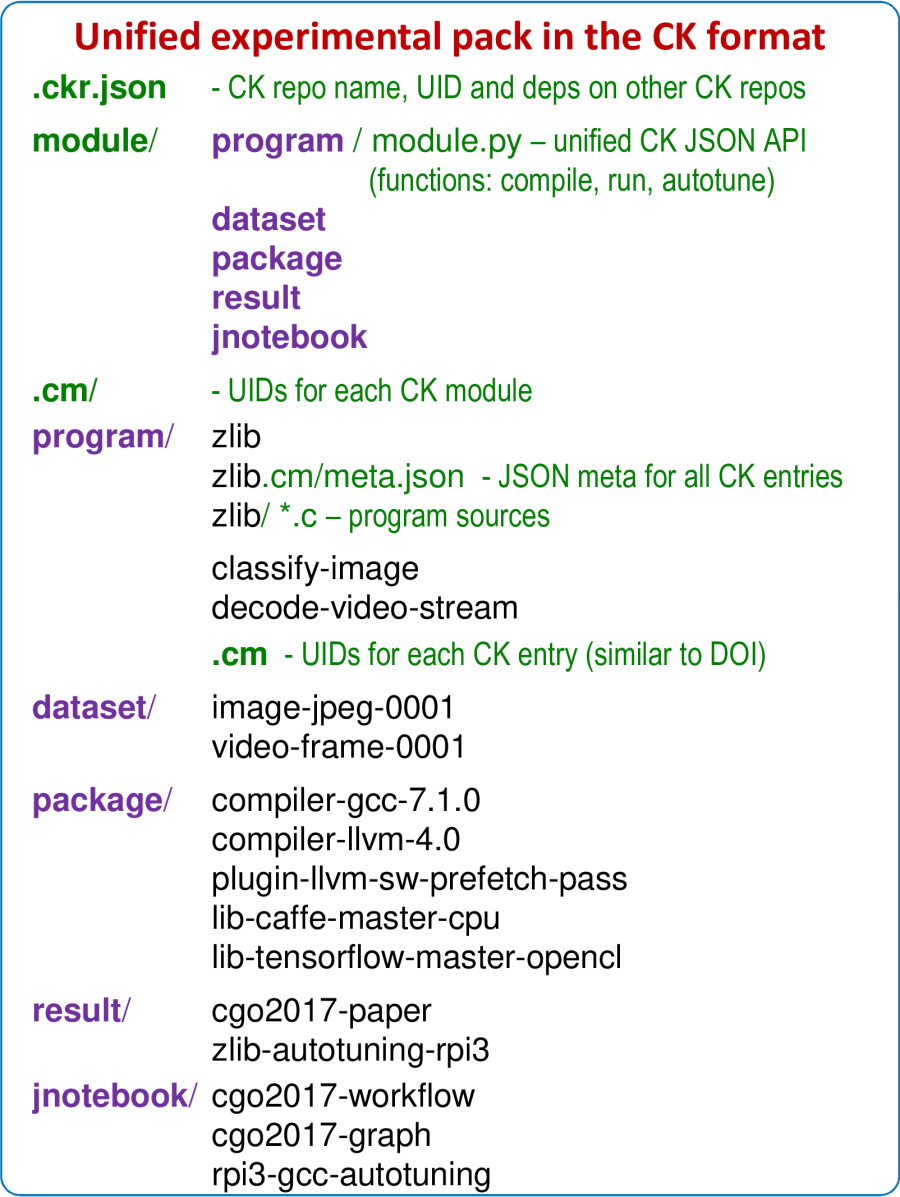

Since there was no available open-source framework with all these features, we decided to develop such a framework, Collective Knowledge (CK) [34, 35], from scratch with initial support from the EU-funded TETRACOM project [50]. CK is implemented as a small and portable Python module with a command line front-end to assist users in converting their local objects (code and data) into searchable, reusable and shareable directory entries with user-friendly aliases and auto-generated Unique ID, JSON API and JSON meta information [36], as described in [35, 51] and conceptually shown in Figure 2b.

The user first creates a new local CK repository as follows:

$ ck add repo:new-ck-repo

Initially, it is just an empty directory:

$ ck find repo:new-ck-repo

$ ls `ck find repo:new-ck-repo`

Now, the user starts adding research artifacts as CK components with extensible APIs. For example, after noticing that we always perform 3 common actions on all our benchmarks during our experiments, "compile", "run" and "autotune", we want to provide a common API for these actions and benchmarks, rather than writing ad-hoc scripts. The user can provide such an API with actions by adding a new CK module to a CK repository as follows:

$ ck add new-ck-repo:module:programCK will then create two levels of directories module and program in the new-ck-repo and will add a dummy module.py where common object actions can be implemented later. CK will also create a sub-directory .cm (collective meta) with an automatically generated Unique ID of this module and various pre-defined descriptions in JSON format (date and time of module creation, author, license, etc) to document provenance of the CK artifacts.

Users can now create holders (directories) for such objects sharing common CK module and an API as follows:

$ ck add new-ck-repo:program:new-benchmarkCK will again create two levels of directories: the first one specifying used CK module (program) and the second one with alias new-benchmark to keep objects. CK will also create three files in an internal .cm directory:

●

●

●

Users can then find a path to a newly created object holder (CK entry) using the ck find program:new-benchmark command and then copy all files and sub-directories related to the given object using standard OS shell commands.

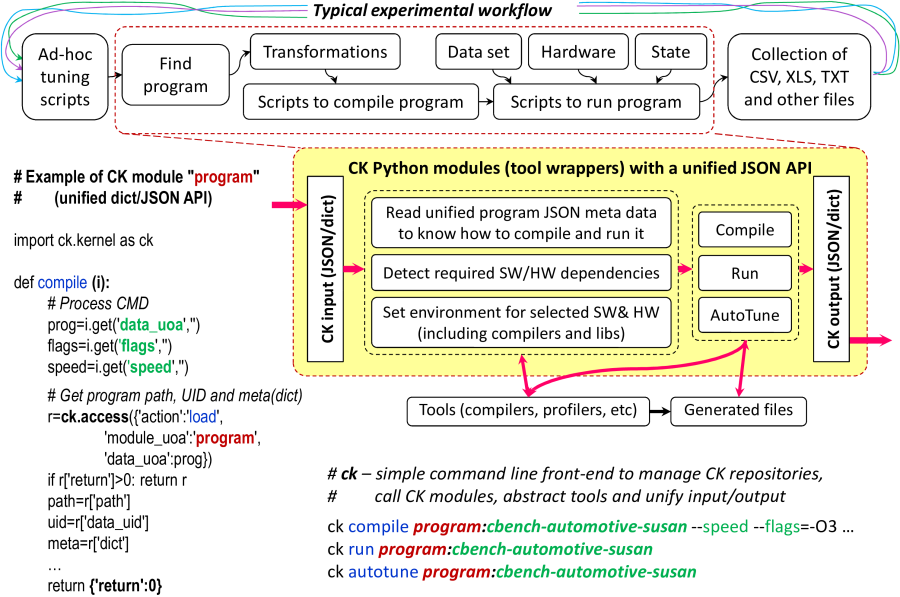

This allows to get rid of ad-hoc scripts by implementing actions inside reusable CK Python modules as shown in Figure 3. For example, the user can add an action to a given module such as compile program as follows:

$ ck add_action module:program --func=compileCK will create a dummy function body with an input dictionary i inside module.py in the CK module:program entry. Whenever this function is invoked via CK using the following format:

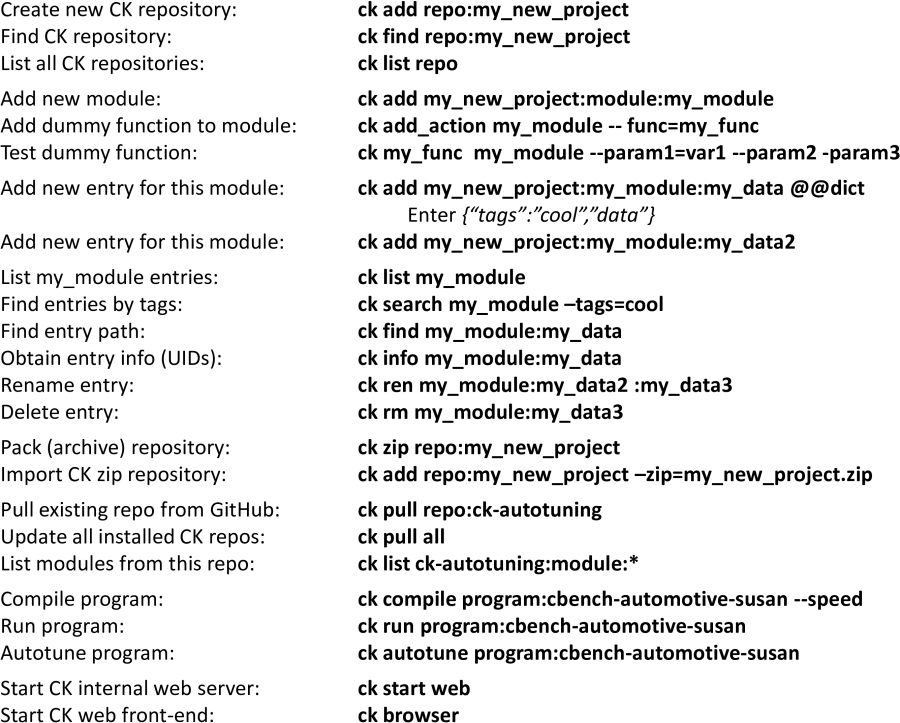

$ ck compile program:some_entry --param1=val1the command line will be converted to i dictionary and printed to the console to help novice users understand the CK API. The user can now substitute this dummy function with a specific action on a specific entry (some program in our example based on its meta information) as conceptually shown in Figure 3. The above example shows how to call CK functions from Python modules rather than from the command line using the ck.access function. It also demonstrates how to find a path to a given program entry, load its meta information and unique ID. For the reader's convenience, Figure 4 lists several important CK commands.

This functionality should be enough to start implementing unified compilation and execution of shared programs. For example, the program module can read instructions about how to compile and run a given program from the JSON meta data of related entries, prepare and execute portable sub-scripts, collect various statistics, and embed them to the output dictionary in a unified way. This can be also gradually extended to include extra tools into compilation and execution workflow such as code instrumentation and profiling.

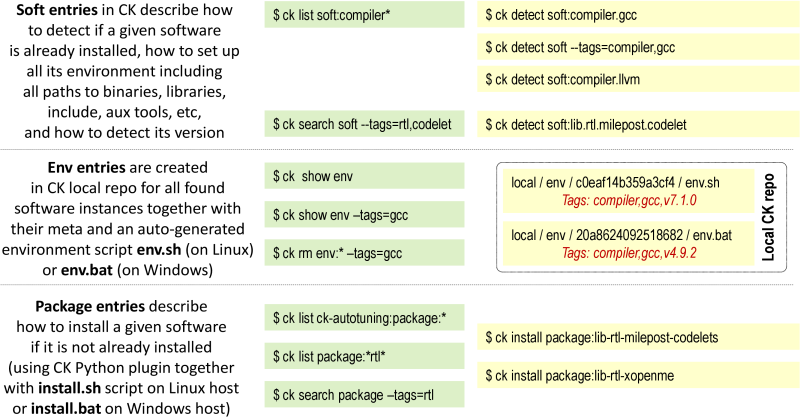

Here we immediately face another problem common for computer systems research: how to support multiple versions of various and continuously evolving tools and libraries? However, since we no longer hardwire calls to specific tools directly in scripts but invoke them from higher-level CK modules, we can detect all required tools and set up their environment before execution. To support this concept even better, we have developed a cross-platform package manager as a ck-env repository [52] with several CK modules including soft, env, package, os and platform. These modules allow the community to describe various operating systems (Linux, Windows, MacOS, Android); detect platform features (ck detect platform); detect multiple-versions of already installed software (ck detect soft:compiler.gcc); prepare CK entries with their environments for a given OS and platform using env module (ck show env) thus allowing easy co-existence of multiple versions of a given tool; install missing software using package modules; describe software dependencies using simple tags in a program meta description (such as compiler,gcc or lib,caffe), and ask the user to select an appropriate version during program compilation when multiple software versions are registered in the CK as shown in Figure 5.

Such approach extends the concept of package managers including Spack [53] and EasyBuild [54] by integrating them directly with experimental CK workflows while using unified CK API, supporting any OS and platform, and allowing the community to gradually extend existing detection or installation procedures via CK Python scripts and CK meta data.

Note that this CK approach encourages reuse of all such existing CK modules from shared CK repositories rather then writing numerous ad-hoc scripts. It should indeed be possible to substitute most of ad-hoc scripts from public research projects (Figure 2) with just a few above modules and entries (Figure 6), and then collaboratively extend them, thus dramatically improving research productivity. For this reason, we keep track of all publicly shared modules and their repositories in this wiki page. The user will just need to add/update a .ckr.json file in the root directory of a given CK repository to describe a dependency on other existing CK repositories with required modules or entries. Since it is possible to uniquely reference any CK entry by two Unique IDs (module UID:object UID), we also plan to develop a simple web service to automatically index and discover all modules similar to DOI.

The open, file-based format of CK repositories allows researchers to continue editing entries and their meta directly using their favourite editors. It also simplifies exchange of these entries using Git repositories, zip archives, Docker images and any other popular tool. At the same time, schema-free and human readable Python dictionaries and JSON files helps users to collaboratively extend actions, API and meta information while keeping backward compatibility. Such approach should let the community to gradually and collaboratively convert and cross-link all existing ad-hoc code and data into unified components with extensible API and meta information. This, in turn, allows users organize their own research while reusing existing artifacts, building upon them, improving them and continuously contributing back to Collective Knowledge similar to Wikipedia.

We also noticed that CK can help students reduce preparation time for Artifact Evaluation [7] at conferences while automating preparation and validation of experiments since all artifacts, workflows and repositories are immediatelly ready to be shared, ported and plugged in to research workflows.

For example, the highest ranked artifact from the CGO'17 article [55] was implemented and shared using the CK framework [56]. That is why CK is now used and publicly extended by leading companies [35], universities [55] and organizations [57] to encourage, support and simplify technology transfer between academia and industry.

3 Assembling portable and customizable autotuning workflow

Autotuning combined with various run-time adaptation,

genetic and machine learning techniques is a popular approach

in computer systems research to automatically explore multi-dimensional

design and optimization spaces [10, 58, 59, 60, 11, 12, 13, 61, 14, 62, 15, 16, 17, 18, 19, 63, 20, 64, 65, 66, 67, 68, 69, 70, 29, 71, 72, 73].

CK allows to unify such techniques by developing a common, universal, portable, customizable, multi-dimensional and multi-objective autotuning workflow as a CK module (pipeline from the public ck-autotuning repository with the autotune function). This allows us to abstract autotuning by decoupling it from the autotuned objects such as "program". Users just need to provide a compatible function "pipeline" in a CK module which they want to be autotuned with a specific API including the following keys in both input and output:

●

●

●

●

●

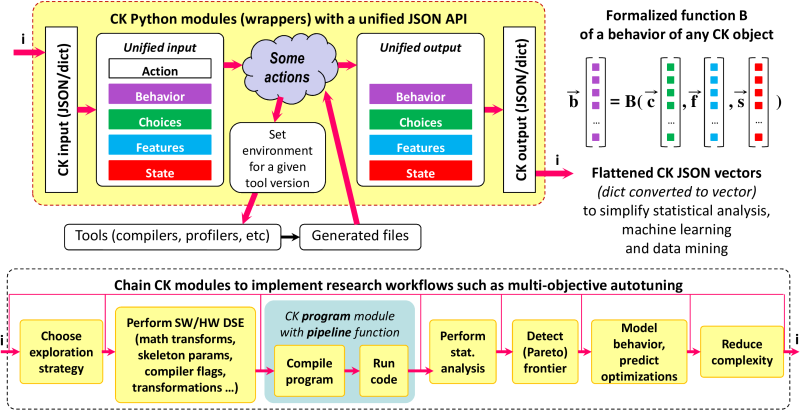

Autotuning can now be implemented as a universal and extensible workflow applied to any object with a matching JSON API by chaining together related CK modules with various exploration strategies, program transformation tools, compilers, program compilation and execution pipeline, architecture simulators, statistical analysis, Pareto frontier filter and other components, as conceptually shown in Figure 7. Researchers can also use unified machine learning CK modules (wrappers to R and scikit-learn [74]) to model the relationship between c, f, s and the observed behavior b, increase coverage, speed up (focus) exploration, and predict efficient optimizations [4, 6]. They can also take advantage of a universal complexity reduction module which can automatically simplify found solutions without changing their behavior, reduce models and features without sacrificing accuracy, localize performance issues via differential analysis [75], reduce programs to localize bugs, and so on.

Even more importantly, our concept of a universal autotuning workflow, knowledge sharing and artifact reuse can help teach students how to apply a well-established holistic and top-down experimental methodology from natural sciences to continuously learn and improve the behavior of complex computer systems [35, 4]. Researchers can continue exposing more design and optimization knobs c, behavioral characteristics b, static and dynamic features f, and run-time state state to optimize and model behavior of various interconnected objects from the workflow depending on their research interests and autotuning scenarios.

Such scenarios are also implemented as CK modules and describe which sets of choices to select, how to autotune them and which multiple characteristics to trade off. For example, existing scenarios include "autotuning OpenCL parameters to improve execution time", "autotuning GCC flags to balance execution time and code size", "autotune LLVM flags to reduce execution time", "automatically fuzzing compilers to detect bugs", "exploring CPU and GPU frequency in terms of execution time and power consumption", "autotuning deep learning algorithms in terms of speed, accuracy, energy, memory usage and costs", and so on.

You can see some of the autotuning scenarios using the following commands:

$ ck pull repo:ck-crowdtuning

$ ck search module --tags="program optimization"

$ ck list programThey can then be invoked from the command line as follows:

$ ck autotune program:[CK program alias] --scenario=[above CK scenario alias]

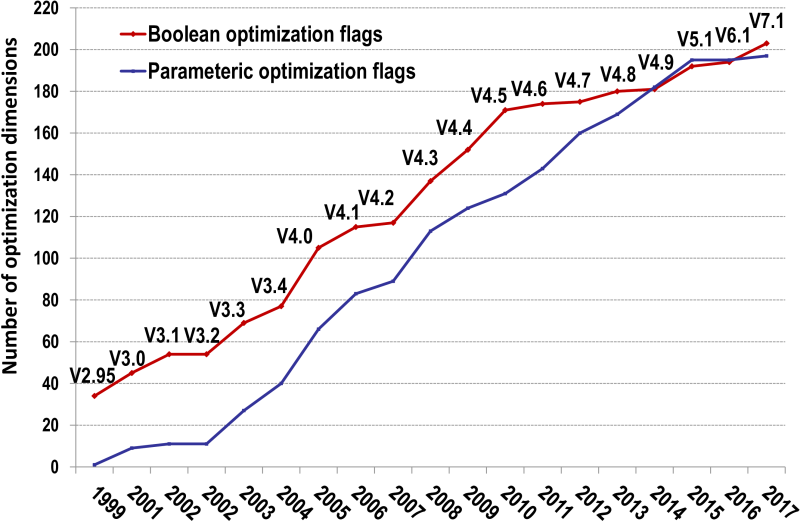

Indeed, the raising complexity of ever changing hardware

made development of compilers very challenging.

Popular GCC and LLVM compilers nowadays include hundreds

of optimizations (Figure 8)

and often fail to produce efficient code (execution time and code size)

on realistic workloads within a reasonable compilation time [10, 58, 76, 77, 4].

Such large design and optimization spaces mean

that hardware and compiler designers can afford to explore

only a tiny fraction of the whole optimization space

using just few ad-hoc benchmarks and data sets on a few architectures

in a tough mission to assemble -O3, -Os and other

optimization levels across all supported architectures and workloads.

Our idea is to keep compiler as a simple collection of code analysis and transformation routines

and separate it from optimization heuristics.

In such case we can use CK autotuning workflow to collaboratively optimize multiple

shared benchmarks and realistic workloads across diverse hardware, exchange optimization results,

and continuously learn and update compiler optimization heuristics for a given hardware

as a compiler plugin.

We will demonstrate this approach by randomly optimizing compiler flags

for susan corners program with aging GCC 4.9.2, the latest GCC 7.1.0

and compare them with Clang 3.8.1.

We already monitor and optimize execution time and code size of this popular image processing

application across different compilers and platforms for many years [24].

That is why we are interested to see if we can still improve it with the CK autotuner

on the latest Raspberry Pi 3 (Model B) devices (RPi3) extensively used for educational purposes.

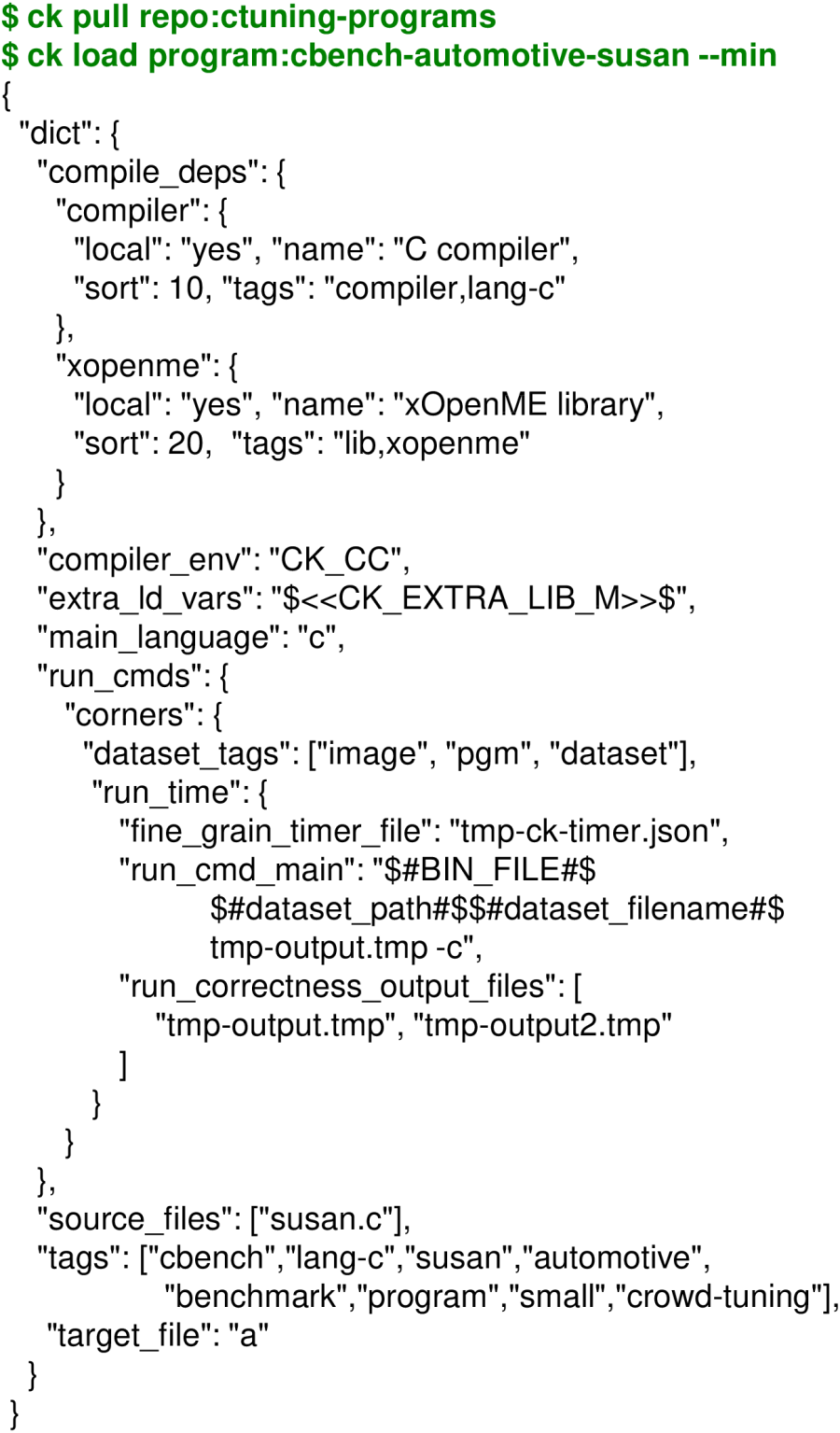

First of all, we added susan program with corners algorithm

to the ctuning-programs repository with the JSON meta information

describing compilation and execution

as shown in Figure 9.

We can then test its compilation and execution by invoking the program pipeline as following:

CK program pipeline will first attempt to detect platform features

(OS, CPU, GPU) and embed them to the input dictionary using key features.

Note that in case of cross-compilation for a target platform different from the host one

(Android, remote platform via SSH, etc),

it is possible to specify such platform using CK os entries and --target_os= flag.

For example, it is possible to compile and run a given CK program for Android via adb as following:

Next, CK will try to resolve software dependencies and prepare environment for compilation

by detecting already installed compilers using CK soft:compiler.* entries

or installing new ones if none was found using CK package:compiler.*.

Each installed compiler for each target

will have an associated CK entry with prepared environment

to let computer systems researchers work with different

versions of different tools:

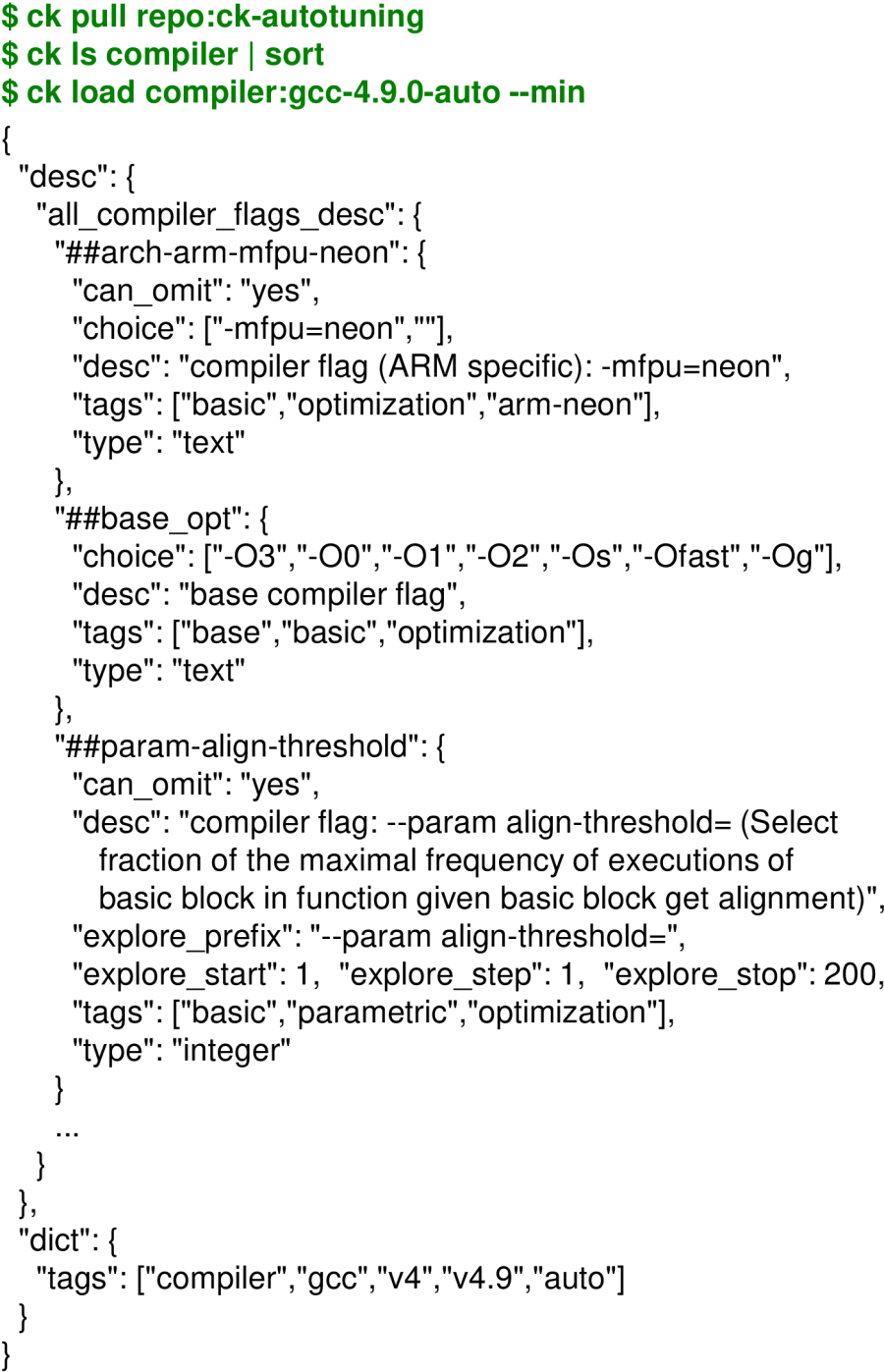

Automatically detected version of a selected compiler is used by CK

to find and preload all available optimization flags

from related compiler:* entries to the choices key

of a pipeline input.

An example of such flags and tags in the CK JSON format

for GCC 4.9 is shown in Figure 10.

The community can continue extending such descriptions for different compilers

including GCC, LLVM, Julia, Open64, PathScale, Java, MVCC, ICC and PGI

using either public ck-autotuning repository

or their own ones.

Finally, CK program pipeline compiles a given program, runs it on a target platform

and fills in sub-dictionary characteristics

with compilation time, object and binary sizes, MD5 sum of the binary, execution time,

used energy (if supported by a used platform), and all other obtained measurements

in the common pipeline dictionary.

We are now ready to implement universal compiler flag autotuning coupled with

this program pipeline.

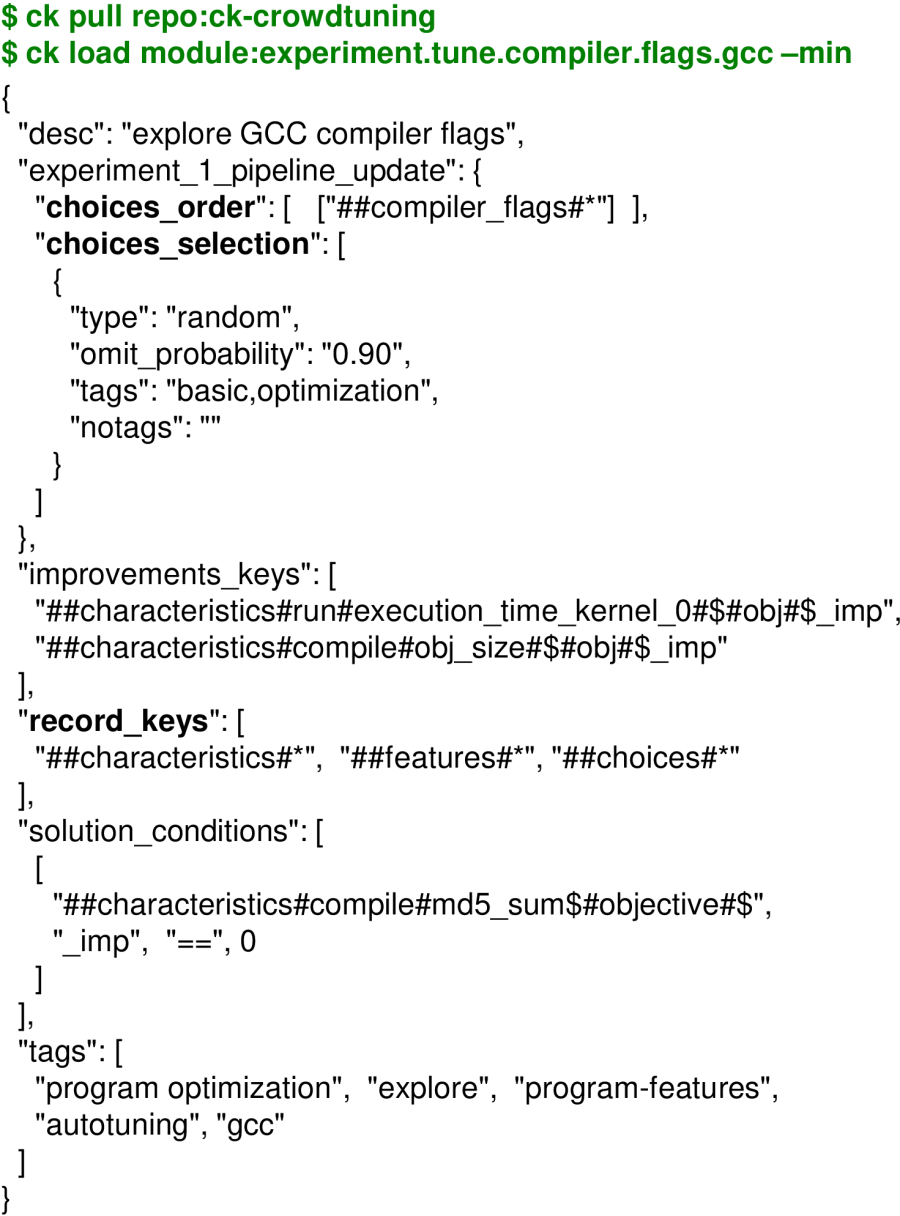

For a proof-of-concept, we implemented GCC compiler flags exploration strategy

which automatically generate N random combinations of compiler flags,

compile a given program with each combination, runs it and record all results

(inputs and outputs of a pipeline) in a reproducible form in a local

CK repository using experiment module from

the ck-analytics

repository:

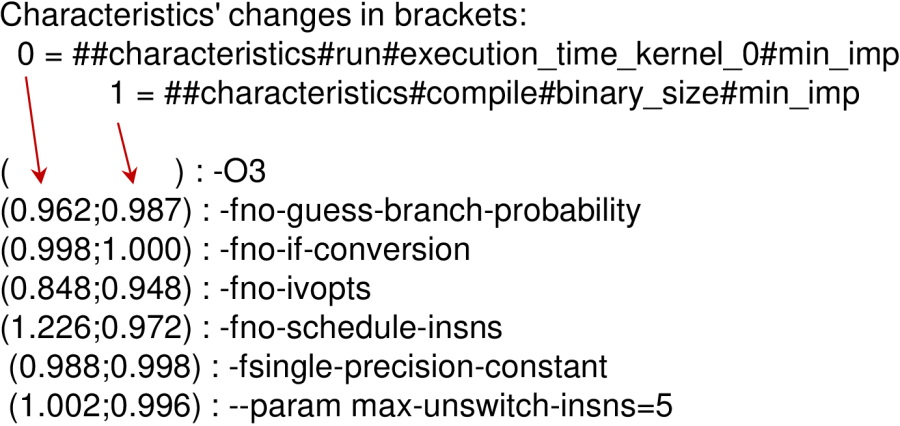

The JSON meta information of this module describes which keys to select

in the program pipeline, how to tune them, and which characteristics to monitor

and record as shown in Figure 11.

Note that a string starting with \#\# is used to reference any key

in a complex, nested JSON or Python dictionary (CK flat key [4]).

Such flat key always starts with \#

followed by \#key if it is a dictionary key or

@position_in_a_list if it is a value in a list.

CK also supports wild cards in such flat keys

such as "\#\#compiler_flags\#\*" and "\#\#characteristics\#\*

to be able to select multiple sub-keys, dictionaries

and lists in a given dictionary.

We can now invoke this CK experimental scenario from the command line as following:

CK will generate 300 random combinations of compiler flags, compile susan corners program

with each combination, run each produced code 3 times to check variation, and record

results in the experiment:tmp-susan-corners-gcc4-300-rnd.

We can now visualize these autotuning results using the following command line:

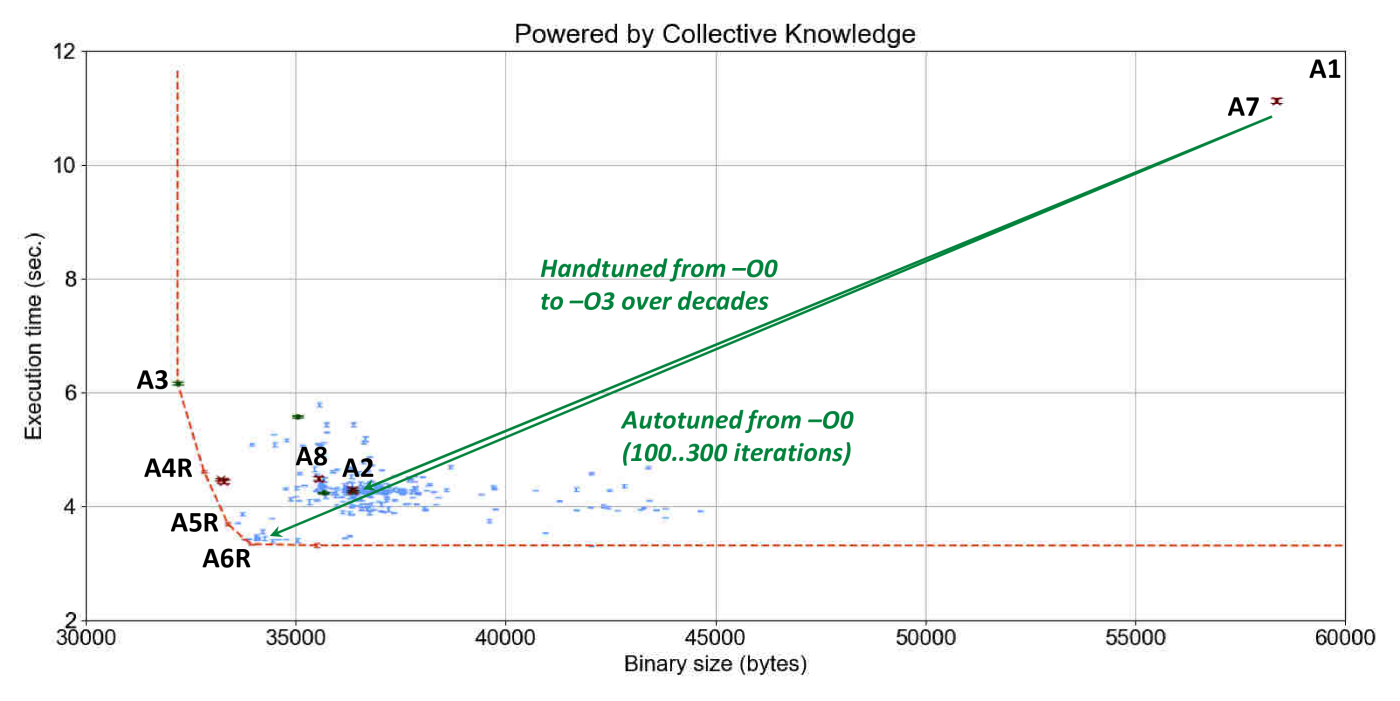

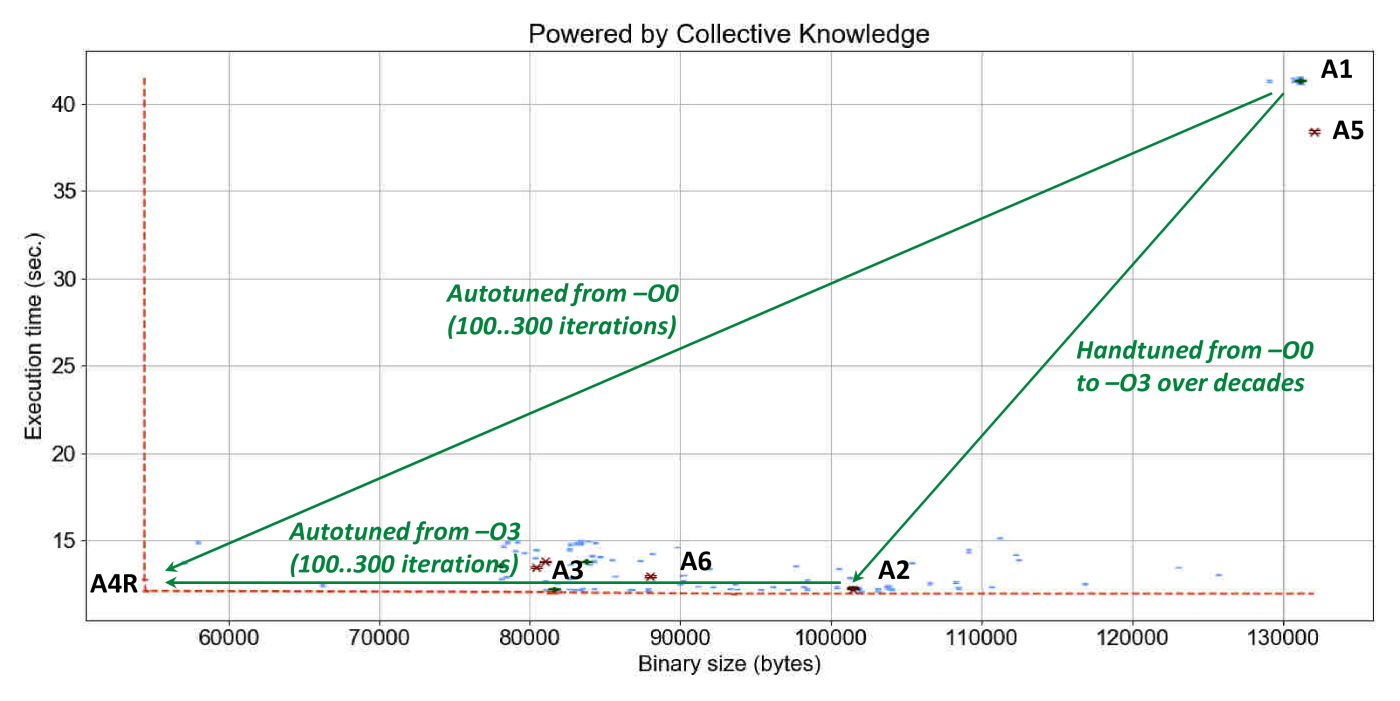

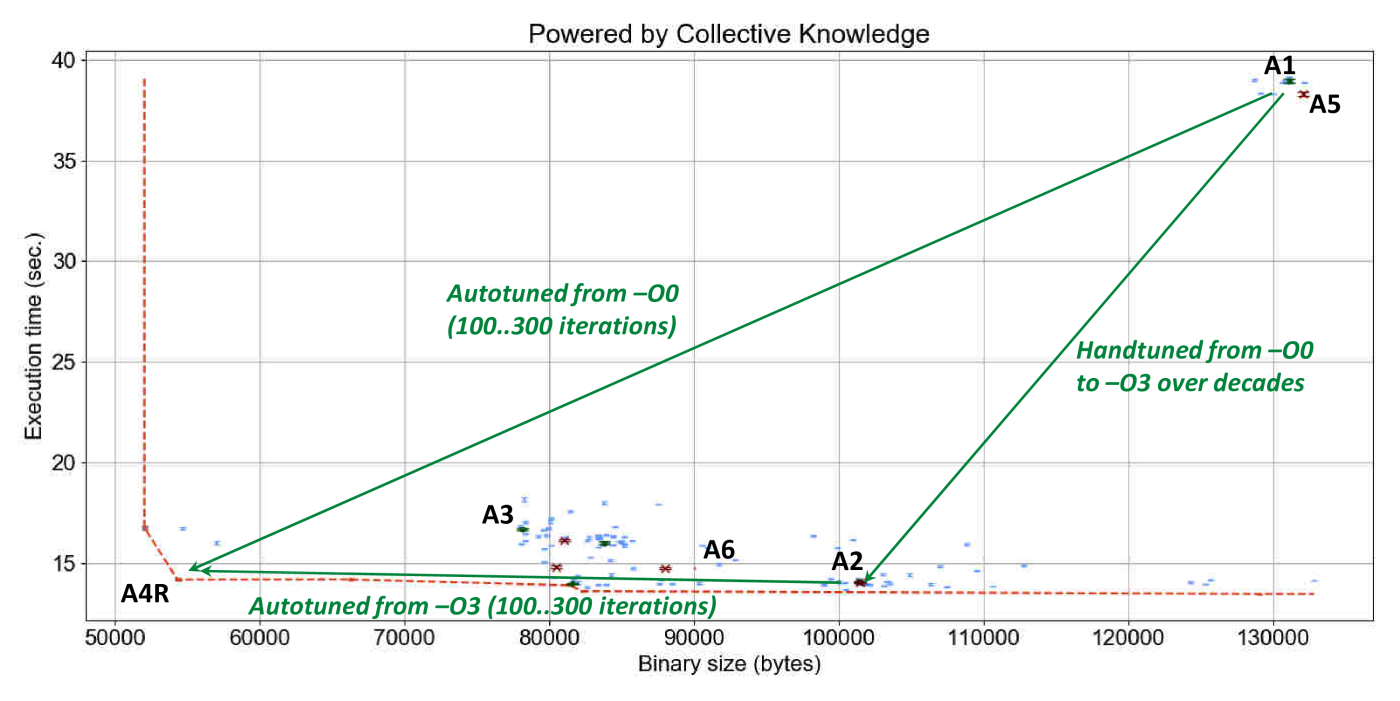

Figure 12 shows a manually annotated graph

with the outcome of GCC 4.9.2 random compiler flags autotuning

applied to susan corners on an RPi3 device in terms of execution

time with variation and code size.

Each blue point on this graph is related to one combination of random compiler flags.

The red line highlights the frontier of all autotuning results (not necessarily Pareto optimal)

which trade off execution time and code size during multi-objective optimization.

We also plotted points when default GCC compilation is used without any flags

or with -O3 and -Os optimization levels.

Finally, we decided to compare optimization results with Clang 3.8.1 also available on RPi3.

Besides showing that GCC -O3 (optimization choice A2)

and Clang -O3 (optimization choice A8) can produce a very similar code,

these results confirm well that it is indeed possible to automatically obtain execution time

and binary size of -O3 and -Os levels in comparison with non-optimized code

within tens to hundreds autotuning iterations (green improvement vectors with 3.6x execution time speedup

and 1.6x binary size improvement).

The graph also shows that it is possible to improve best optimization level -O3

much further and obtain 1.3x execution time speedup (optimization solution A6R

or obtain 11% binary size improvement without sacrifying original execution time

(optimization solution A4R).

Such automatic squeezing of a binary size without sacrificing performance

can be very useful for the future IoT devices.

Note that it is possible to browse all results in a user-friendly way

via web browser using the following command:

CK will then start internal CK web server

available in the ck-web

repository, will run a default web browser, and will

open a web page with all given experimental results.

Each experiment on this page has an associated button

with a command line to replay it via CK such as:

CK will then attempt to reproduce this experiment using the same input

and then report any differences in the output.

This simplifies validation of shared experimental results

(optimizations, models, bugs) by the community

and possibly with a different software and hardware setup

(CK will automatically adapt the workflow to a user platform).

We also provided support to help researchers

visualize their results as interactive graphs

using popular D3.js library as demonstrated in this

link.

Looking at above optimization results one may notice

that one of the original optimization solutions on a frontier A4

has 40 optimization flags, while A4R only 7 as shown in Table 1.

The natural reason is that not all randomly selected flags contribute to improvements.

That is why we developed a simple and universal complexity reduction algorithm.

It iteratively and randomly removes choices from a found solution one by one

if they do not influence monitored characteristics such as execution time and code size

in our example.

Such complexity reduction (pruning) of an existing solution can be invoked as following

(flag --prune_md5 tells CK to exclude a given choice without running code

if MD5 of a produced binary didn't change, thus considerably speeding up flag pruning):

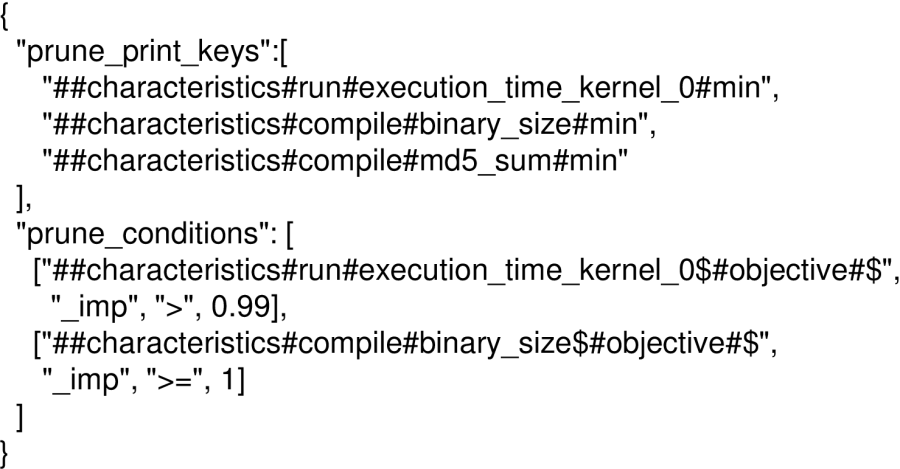

The 'prune.json' file describes conditions on program pipeline keys

when a given choice should be removed as shown in Figure 13.

Such universal complexity reduction approach helps software engineers better understand

individual contribution of each flag to improvements or degradations of all monitored

characteristics such as execution time and code size as shown in Figure 14.

Asked by compiler developers, we also provided an extension to our complexity reduction module

to turn off explicitly all available optimization choices one by one

if they do not influence found optimization result.

Table 2 demonstrates this approach and shows all compiler optimizations contributing to the found optimization solution.

It can help improve internal optimization heuristics, global optimization levels such as -O3,

and improve machine learning based optimization predictions.

This extension can be invoked by adding flags --prune_invert --prune_invert_do_not_remove_key

when reducing complexity of a given solution such as:

We have been analyzing already aging GCC 4.9.2 because

it is still the default compiler for Jessy Debian distribution on RPi3.

However, we would also like to check how our universal autotuner

works with the latest GCC 7.1.0.

Since there is no yet a standard Debian GCC 7.1.0 package available for RPi3,

we need to build it from scratch.

This is not a straightforward task since we have to pick up correct

configuration flags which will adapt GCC build to quite outdated RPi3 libraries.

However, once we manage to do it, we can automate this process

using CK package module.

We created a public ck-dev-compilers repository

to automate building and installation of various compilers including GCC and LLVM via CK.

It is therefore possible to install GCC 7.1.0 on RPi3 as following

(see Appendix or GitHub repository ReadMe file for more details):

This CK package has an install.sh script which is customized

using environment variables or --env flags to build GCC for a target platform.

The JSON meta data of this CK package provides optional software dependencies

which CK has to resolve before installation (similar to CK compilation).

If installation succeeded, you should be able to see two prepared environments

for GCC 4.9.2 and GCC 7.1.0 which now co-exist in the system.

Whenever we now invoke CK autotuning, CK software and package manager

will detect multiple available versions of a required software dependency

and will let you choose which compiler version to use.

Let us now autotune the same susan corners program

by generating 300 random combinations of GCC 7.1.0 compiler flags

and record results in the experiment:tmp-susan-corners-gcc7-300-rnd:

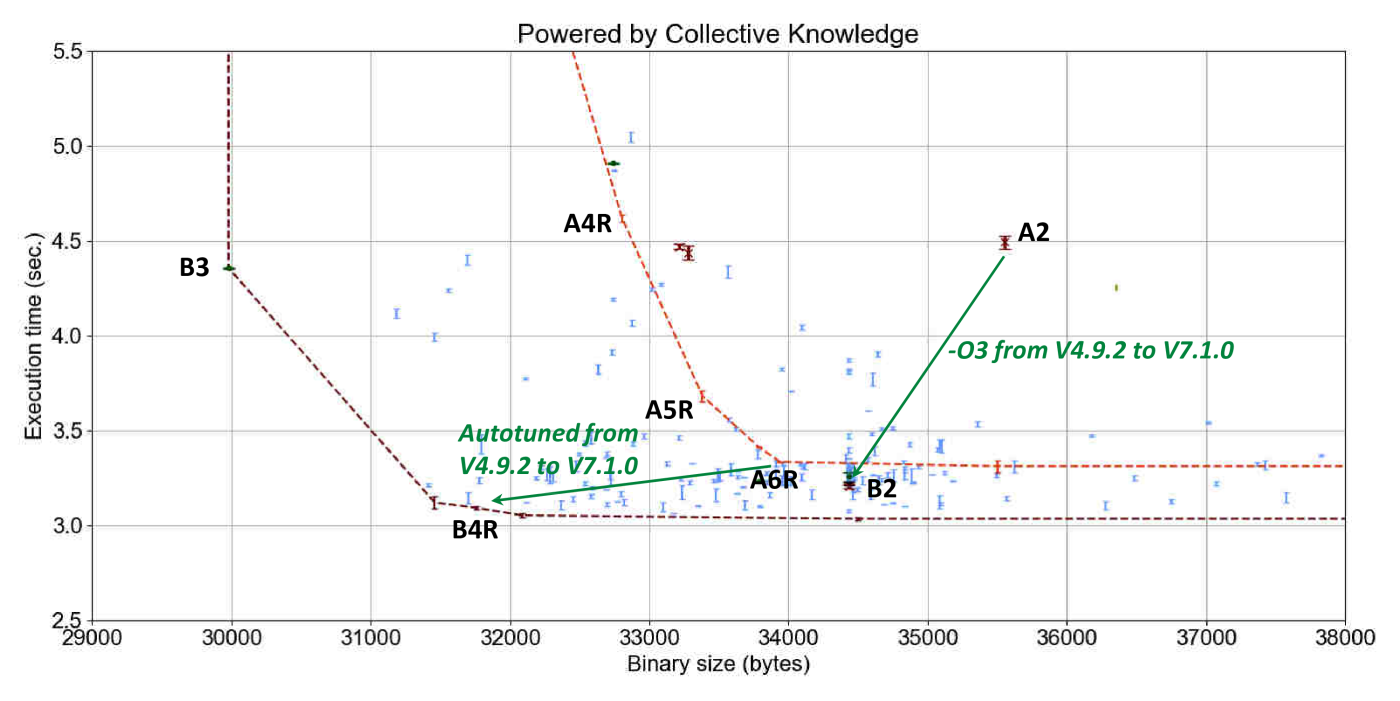

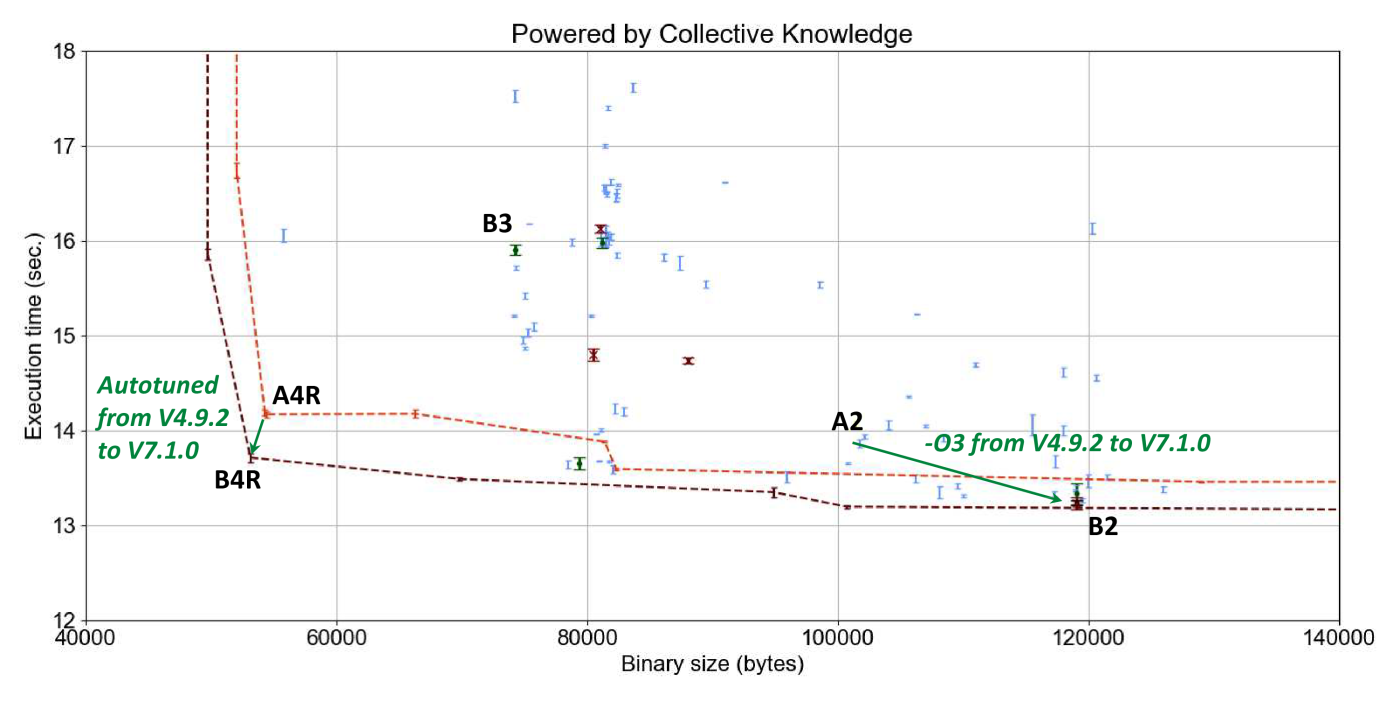

Figure 15 shows the results of such GCC 7.1.0

compiler flag autotuning (B points) and compares them

against GCC 4.9.2 (A points).

Note that this graph is also available in interactive form online.

It is interesting to see considerable improvement in execution time of susan corners

when moving from GCC 4.9 to GCC 7.1 with the best optimization level -O3.

This graph also shows that new optimization added during the past 3 years opened up

many new opportunities thus considerably expanding autotuning frontier (light red

dashed line versus dark red dashed line).

Autotuning only managed to achieve a modest improvement of a few percent over -O3.

On the other hand, GCC -O3 and -Os are still far from achieving

best trade-offs for execution time and code size.

For example, it is still possible to improve a program binary size

by 10% (reduced solution B4R) without degrading best achieved

execution time with the -O3 level (-O3), or improve

execution time of -Os level by 28% while slightly degrading code size by 5%.

Note that for readers' convenience we added scripts to reproduce and validate

all results from this section to the following CK entries:

These results confirm that it is difficult to manually prepare compiler optimization

heuristic which can deliver good trade offs between execution time and code size

in such a large design and optimization spaces.

They also suggest that either susan corners or similar code

was eventually added to the compiler regression testing suite,

or some engineer check it manually and fixed compiler heuristic.

However, there is also no guarantee that future GCC versions will still

perform well on the susan corners program.

Neither these results guarantee that GCC 7.1.0

will perform well on other realistic workloads or devices.

Common experimental frameworks can help tackle this problem too by

crowdsourcing autotuning across diverse hardware provided by volunteers and combining it with online

classification, machine learning and run-time adaptation [5, 66, 6].

However, our previous frameworks did not cope well with "big data" problem

(cTuning framework [5, 9] based on MySQL database)

or were too "heavy" (Collective Mind aka cTuning 3 framework [4]).

Extensible CK workflow framework combined with our cross-platform package manager,

internal web server and machine learning, helped solve most of the above issues.

For example, we introduced a notion of a remote repository in the CK -

whenever such repository is accessed CK simply forward all JSON requests

to an appropriate web server.

CK always has a default remote repository remote-ck connected

with a public optimization repository running CK web serve

at cKnowledge.org/repo:

For example, one can see publicly available experiments from command line as following:

Such organization allows one to crowdsource autotuning, i.e. distributing autotuning

of given shared workloads in a cloud or across diverse platforms simply by using remote

repositories instead of local ones.

On the other hand, it does not address the problem of optimizing larger applications

with multiple hot spots.

It also does not solve the "big data" problem

when a large amount of data from multiple participants

needed for reproducibility will be continuously aggregated in a CK server.

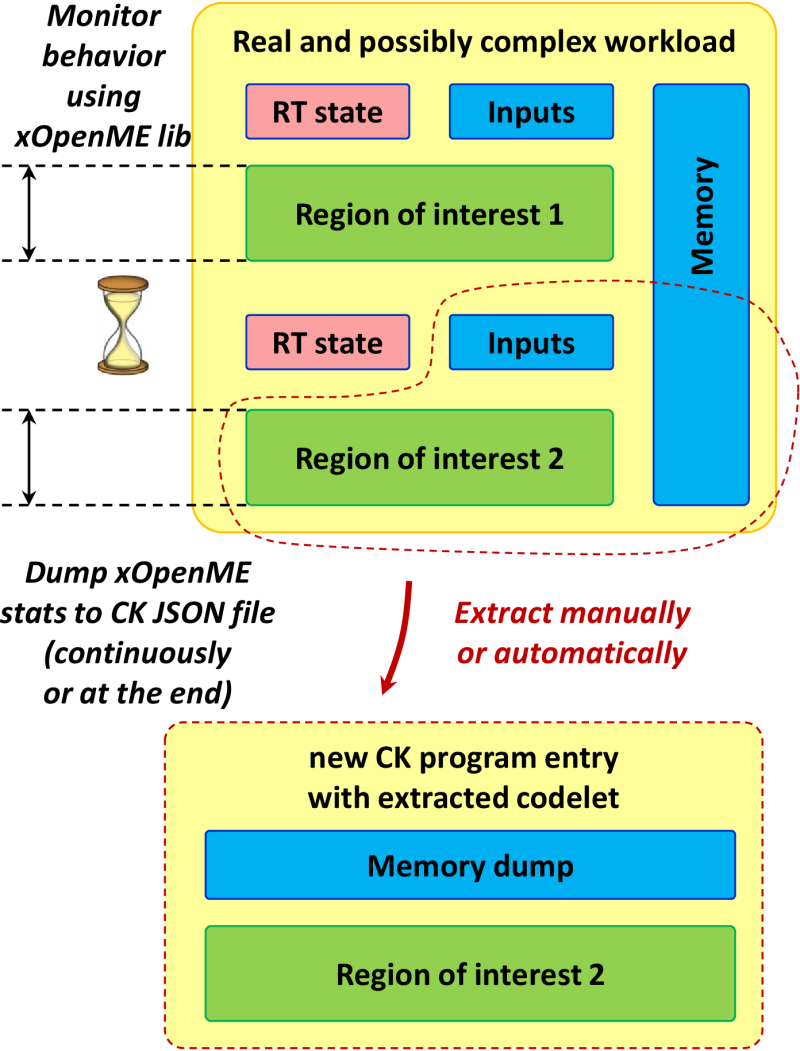

However, we have been already addressing the first problem by either

instrumenting, monitoring and optimizing hot code regions in large applications

using our small "XOpenME" library, or even extracting such code regions

from a large application with a run-time data set and registering them

in the CK as standalone programs (codelets or computational species)

as shown in Figure 16

( [4]).

In the MILEPOST project [24]

we used a proprietary "codelet extractor" tool from CAPS Entreprise

(now dissolved) to automatically extract such hot spots with their data sets

from several real software projects and 8 popular benchmark suits

including NAS, MiBench, SPEC2000, SPEC2006, Powerstone, UTDSP and SNU-RT.

We shared those of them with a permissive license as CK programs

in the ctuning-programs repository

to be compatible with the presented CK autotuning workflow.

We continue adding real, open-source applications and libraries as CK program entries

(GEMM, HOG, SLAM, convolutions) or manually extracting and sharing interesting code

regions from them with the help of the community.

Such a large collection of diverse and realistic workloads

should help make computer systems research more applied and practical.

As many other scientists, we also faced a big data problem when continuously

aggregating large amounts of raw optimization data during crowd-tuning

for further processing including machine learning [9].

We managed to solve this problem in the CK by using

online pre-processing of raw data and online classification

to record only the most efficient optimization solutions

(on a frontier in case of multi-objective autotuning)

along with unexpected behavior (bugs and numerical

instability) [6].

It is now possible to invoke crowd-tuning of GCC compiler flags (improving execution time) in the CK as following:

or

In contrast with traditional autotuning, CK will first query remote-ck repository

to obtain all most efficient optimization choices aka solutions (combinations of random compiler flags in our example)

for a given trade-off scenario (GCC compiler flag tuning to minimize execution time), compiler version,

platform and OS.

CK will then select a random CK program (computational species),

compiler and run it with all these top optimizations,

and then try N extra random optimizations (random combinations of GCC flags)

to continue increasing design and optimization space coverage.

CK will then send the highest improvements of monitored characteristics

(execution time in our example) achieved for each optimization solution as well as worst degradations

back to a public server.

If a new optimization solution if also found during random autotuning,

CK will assign it a unique ID (solution_uid

and will record it in a public repository.

At the public server side, CK will merge improvements and degradations for a given

program from a participant with a global statistics while recording how many programs

achieved the highest improvement (best species) or worst degradation (worst species) for a given optimization

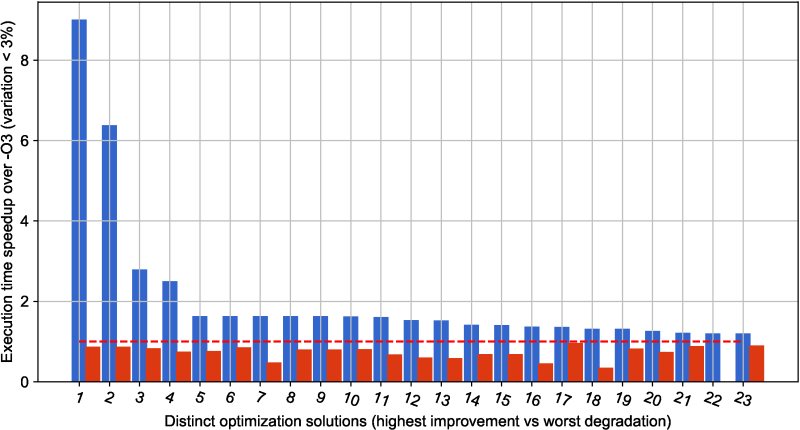

as shown in Figure 17.

This figure shows a snapshot of public optimization results

with top performing combinations of GCC 4.9.2 compiler flags

on RPi3 devices which minimize execution time of shared CK workloads

(programs and data sets) in comparison with -O3 optimization level.

It also shows the highest speedup and the worse degradation achieved

across all CK workloads for a given optimization solution, as well

as a number of workloads where this solution was the best or the worst

(online classification of all optimization solutions).

Naturally this snapshot automatically generated from the public repository

at the time of publication may slightly differ from continuously updated

live optimization results available at this link.

These results confirm that GCC 4.9.2 misses many optimization opportunities

not covered by -O3 optimization level.

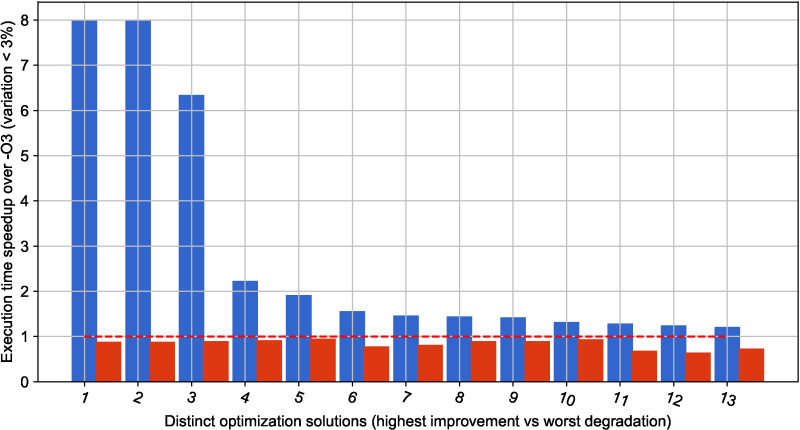

Figure 18 with optimization results

for GCC 7.1.0 also confirms that this version was considerably improved

in comparison with GCC 4.9.2

(latest live results are available in our public optimization repository

at this link):

there are fewer efficient optimization solutions found during crowd-tuning

14 vs 23 showing the overall improvement of the -O3 optimization level.

Nevertheless, GCC 7.1.0 still misses many optimization opportunities

simply because our long-term experience suggests that it is infeasible

to prepare one universal and efficient optimization heuristics

with good multi-objective trade-offs for all continuously

evolving programs, data sets, libraries, optimizations and platforms.

That is why we hope that our approach of combining a common workflow framework

adaptable to software and hardware changes, public repository of optimization knowledge,

universal and collaborative autotuning across multiple hardware platforms

(e.g. provided by volunteers or by HPC providers), and community involvement

should help make optimization and testing of compilers

more automatic and sustainable [6, 35].

Rather than spending considerable amount of time on writing their own autotuning and crowd-tuning

frameworks, students and researchers can quickly reuse shared workflows,

reproduce and learn already existing optimizations, try to improve optimization heuristics,

and validate their results by the community.

Furthermore, besides using -Ox compiler levels, academic and industrial users

can immediately take advantage of various shared optimizations solutions automatically

found by volunteers for a given compiler and hardware via CK using solution_uid flag.

For example, users can test the most efficient combination of compiler flags

which achieved the highest speedup for GCC 7.1.0 on RPi3

(see "Copy CID to clipboard for a given optimization solution at this

link)

for their own programs using CK:

or

We can now autotune any of these programs via CK as described in Section 4.

For example, the following command will autotune zlib decode workload

with 150 random combinations of compiler flags including parametric and architecture

specific ones, and will record results in a local repository:

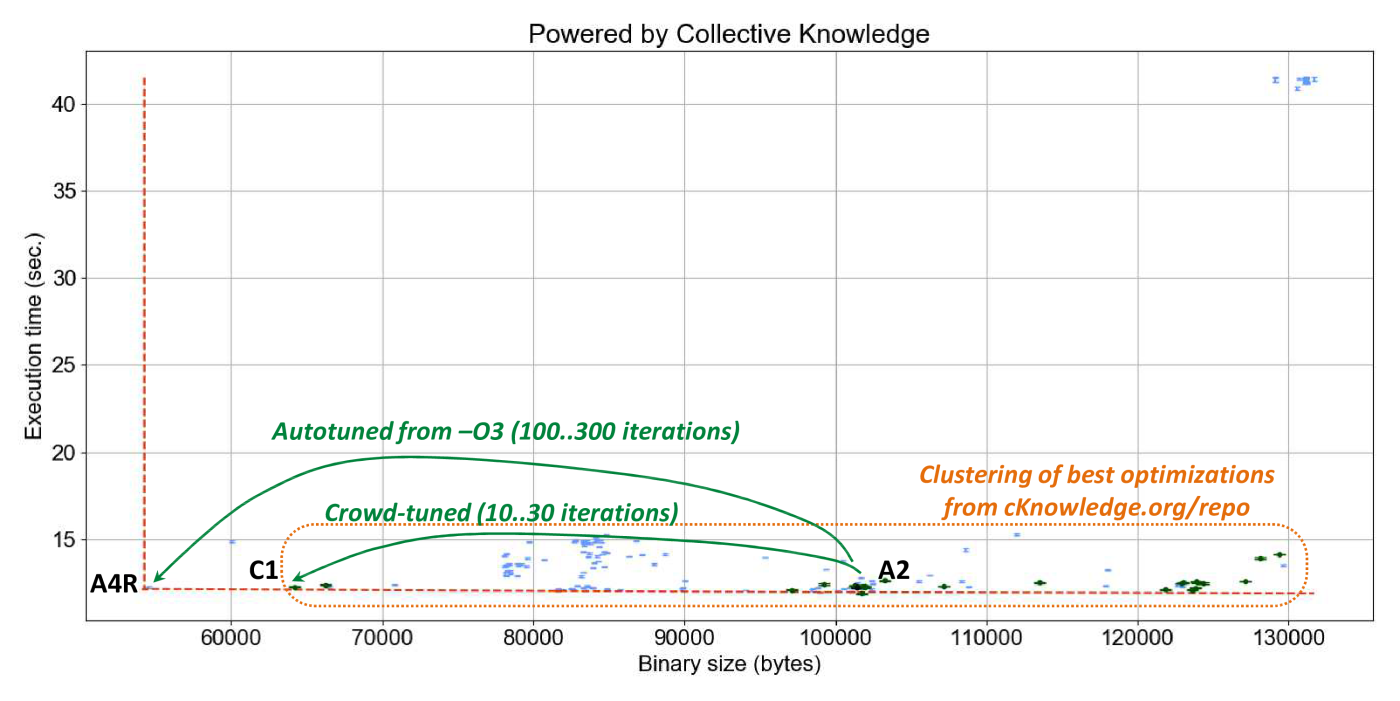

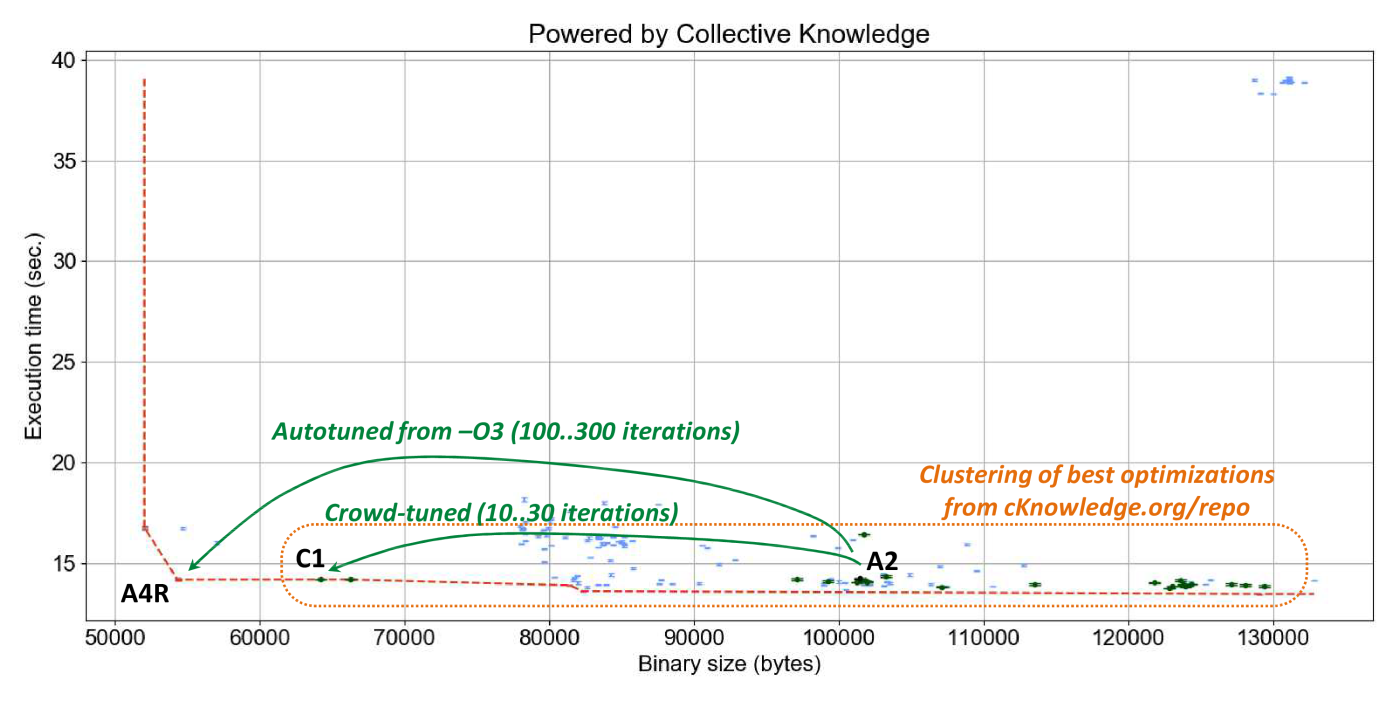

Figure 19

(link with interactive graph)

shows a manually annotated graph with the outcome of such autotuning

when using GCC 4.9.2 compiler on RPi3 device

in terms of execution time with variation and code size.

Each blue point on this graph is related to one combination of random compiler flags.

The red line highlights the frontier of all autotuning results

to let users trade off execution time and code size

during multi-objective optimization.

Similar to graphs in Section 4, we also plotted points

when using several main GCC and Clang optimization levels.

In contrast with susan corners workload, autotuning did not improve execution time

of zlib decode over -O3 level most likely because this algorithm is present

in many benchmarking suits.

On the other hand, autotuning impressively improved code size over -O3

by nearly 2x without sacrificing execution time, and by 1.5x with 11% execution time

improvement over -Os (reduced optimization solution A4R),

showing that code size optimization is still a second class citizen.

Since local autotuning can still be quite costly (150 iterations to achieve above results),

we can now first check 10..20 most efficient combinations of compiler flags

already found and shared by the community for this compiler and hardware

(Figure 17).

Note that programs from this section did not participate in crowd-tuning

to let us have a fair evaluation of the influence of shared optimizations

on these programs similar to leave-one-out cross-validation in machine learning.

Figure 20 shows "reactions"

of zlib decode to these optimizations in terms of execution time and code size

(the online interactive graph).

We can see that crowd-tuning solutions indeed cluster in a relatively small area

close to -O3 with one collaborative solution (C1) close to the

best optimization solution found during lengthy autotuning (A4R)

thus providing a good trade off between autotuning time, execution time and code size.

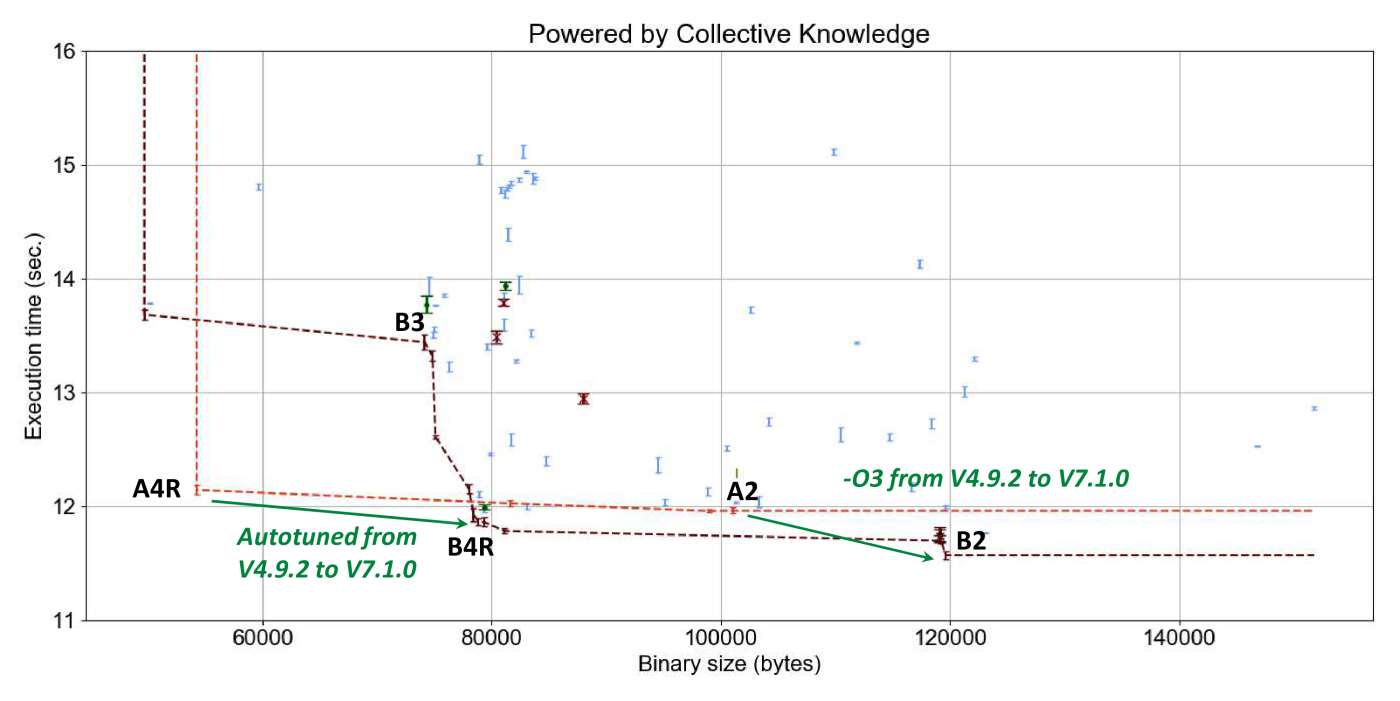

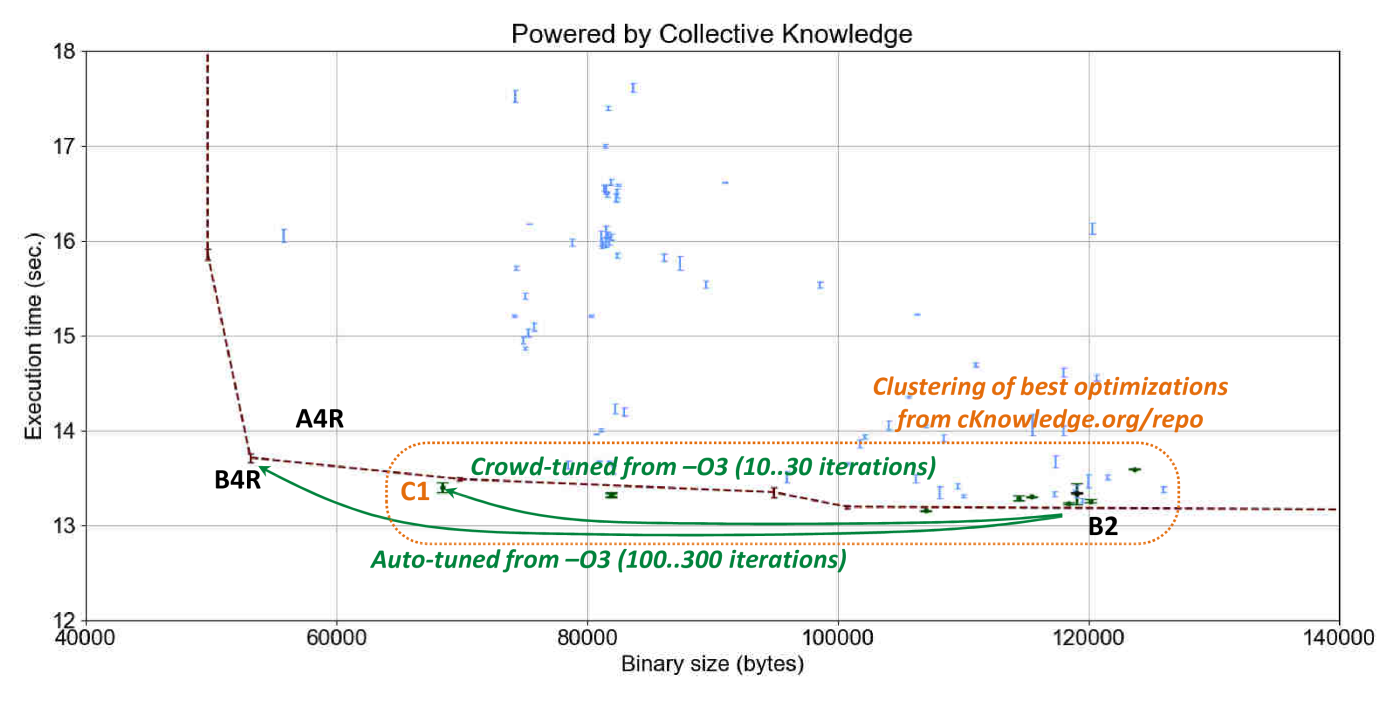

Autotuning zlib decode using GCC 7.1.0 revels even more interesting results

in comparison with susan corners as shown in Figure 21

(the online interactive graph).

While there is practically no execution time improvements when switching from GCC 4.9.2 to GCC 7.1.0

on -O3 and -Os optimization levels, GCC 7.1.0 -O3 considerably degraded code size by nearly 20%.

Autotuning also shows few opportunities on GCC 7.1.0 in comparison with GCC 4.9.2

where the best found optimization B4R is worse in terms of a code size than A4R also by around 20%.

These results highlight issues which both end-users and compiler designers face

when searching for efficient combinations of compiler flags or preparing the

default optimization levels -Ox.

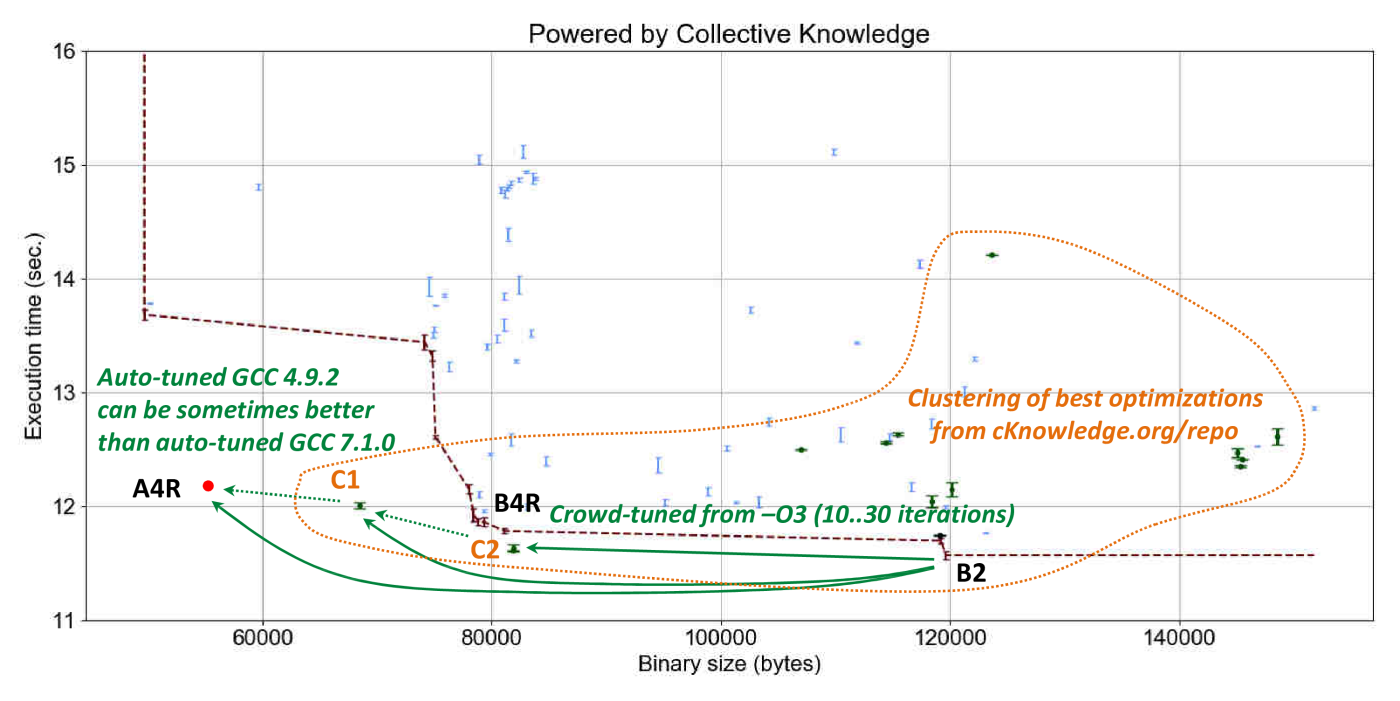

CK crowd-tuning can assist in this case too - Figure 22

shows reactions of zlib decode to the most efficient combinations of GCC 7.1.0 compiler flags

shared by the community for RPi3

(the online interactive graph).

Shared optimization solution C2 achieved the same results in terms of execution time and code size

as reduced solution B4R found during 150 random autotuning iterations.

Furthermore, another shared optimization solution C1 improved code size by 15% in comparison

with GCC 7.1.0 autotuning solution B4R and is close to the best solution GCC 4.9.2 autotuning solution A4R.

These results suggest that 150 iterations with random combinations of compiler flags

may not be enough to find an efficient solution for zlib decode.

In turn, crowd-tuning can help considerably accelerate and focus such optimization space exploration.

We performed the same autotuning and crowd-tuning experiments for zlib encode workload

with the results shown in Figures 23, 24, 25, 26.

The results show similar trend that -O3 optimization level of both GCC 4.7.2 and GCC 7.1.0

perform well in terms of execution time, while there is the same degradation in the code size when moving to a new compiler

(since we monitor the whole zlib binary size for both decode and encode functions).

Crowd-tuning also helped improve the code size though optimizations A4R, B4R and C1

are not the same as in case of zlib decode.

The reason is that algorithms are different and need different optimizations

to keep execution time intact while improving code size.

Such result provides an extra motivation for function-level optimizations

already available in GCC.

Besides zlib, we applied crowd-tuning with the best found and shared optimizations

to other RPi programs using GCC 4.9.2 and GCC 7.1.0.

Table 3 shows reactions of these optimizations

with the best trade-offs for execution time and code size.

One may notice that though GCC 7.1.0 -O3 level improves execution time

of most of the programs apart from a few exceptions, it also considerably degrades

code size in comparison with GCC 4.9.2 -O3 level.

These results also confirm that neither -O3 nor -Os on both

GCC 4.9.2 and GCC 7.1.0 achieves the best trade-offs for execution

time and code size thus motivating again our collaborative and continuous optimization

approach.

Indeed, a dozen of shared most efficient optimizations at cKnowledge.org/repo

is enough to either improve execution time of above programs by up to 1.5x or code size by up to 1.8x or even

improve both size and speed at the same time.

It also helps end-users find the most efficient optimization no matter which compiler, environment and hardware are used.

We can also notice that 11 workloads (computational species)

share -O3 -fno-inline -flto combination of flags

to achieve the best trade-off between execution time and code size.

This result supports our original research to use workload features,

hardware properties, crowd-tuning and machine learning

to predict such optimizations [5, 24, 6].

However, in contrast with the past work, we are now able

to gradually collect a large, realistic (i.e. not randomly

synthesized) set of diverse workloads with the help of the

community to make machine learning statistically meaningful.

All scripts to reproduce experiments from this section are available in the following CK entries:

Our CK-based customizable autotuning workflow can assist in creating,

learning and improving such collaborative fuzzers

which can distribute testing across diverse platforms and workloads

provided by volunteers while sharing and reproducing bugs.

We just need to retarget our autotuning workflow to search for

bugs instead of or together with improvements in performance,

energy, size and other characteristics.

We prepared an example scenario experiment.tune.compiler.flags.gcc.fuzz

to randomly generate compiler flags for any GCC and record only cases

with failed program pipeline.

One can use it in a same way as any CK autotuning while selecting

above scenario as following:

It is then possible to view all results with unexpected behavior

in a web browser and reproduce individual cases

on a local or different machine as following:

We performed the same auto-fuzzing experiments for susan corners program

with both GCC 4.9.2 and GCC 7.1.0 as in Section 4.

These results are available in the following CK entries:

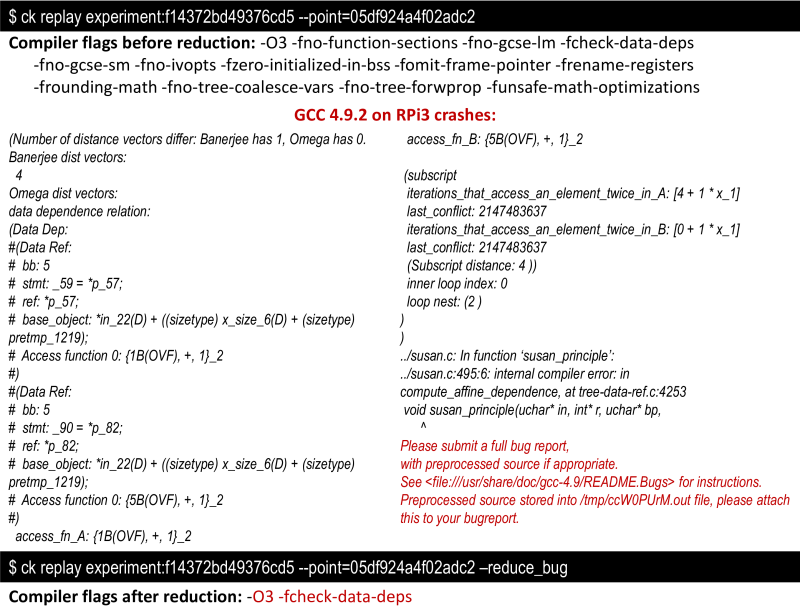

Figure 27 shows a simple example of reproducing

a GCC bug using CK together with the original random combination of flags

and the reduced one.

GCC flag -fcheck-data-deps compares several passes for dependency analysis

and report a bug in case of discrepancy.

Such discrepancy was automatically found when autotuning susan corners

using GCC 4.9.2 on RPi3.

Since CK automatically adapts to a user environment, it is also possible

to reproduce the same bug using a different compiler version.

Compiling the same program with the same combination of flags on the same platform

using GCC 7.1.0 showed that this bug has been fixed in the latest compiler.

We hope that our extensible and portable benchmarking workflow will help students and engineers

prototype and crowdsource different types of fuzzers.

It may also assist even existing projects [81, 82]

to crowdsource fuzzing across diverse platforms and workloads.

For example, we collaborate with colleagues from Imperial College London

to develop CK-based, continuous and collaborative OpenGL and OpenCL compiler

fuzzers [83, 84, 85]

while aggregating results from users in public or private repositories

( link to public OpenCL fuzzing results across diverse desktop and mobile platforms).

All scripts to reproduce experiments from this section are available in the following CK entry:

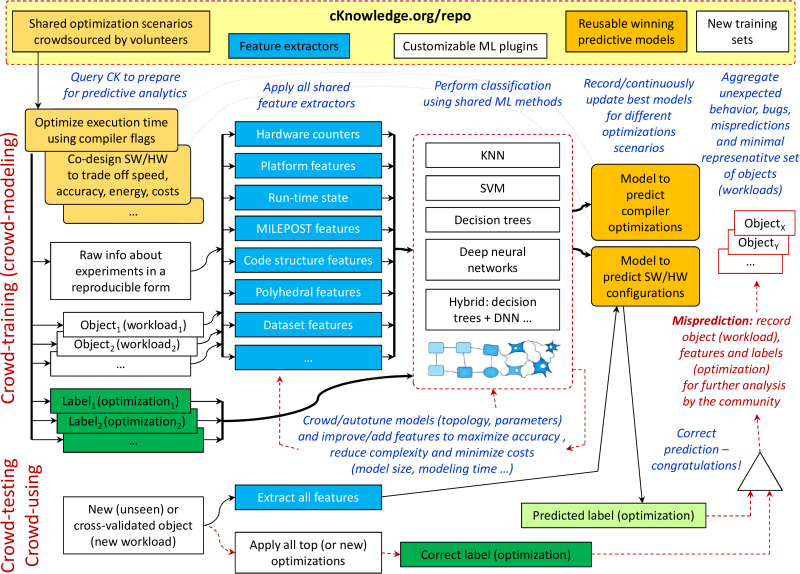

To demonstrate our approach, we converted all our past research artifacts

on machine learning based optimization and SW/HW co-design

to CK modules.

We then assembled them to a universal Collective Knowledge workflow

shown in Figure 28.

If you do not know about machine learning based compiler optimizations,

we suggest that you start from our MILEPOST GCC paper [24]

to make yourself familiar with terminology

and methodology for machine learning training

and prediction used further.

Next, we will briefly demonstrate the use of this customizable workflow

to continuously classify shared workloads presented in this report

in terms of the most efficient compiler optimizations

while using MILEPOST models and features.

First, we query the public CK repository [38]

to collect all optimization statistics together with all associated objects

(workloads, data sets, platforms) for a given optimization scenario.

In our compiler flag optimization scenario, we retrieve all

most efficient compiler flags combinations found and shared

by the community when crowd-tuning GCC 4.9.2 on RPi3 device

(Figure 17).

Note that our CK crowd-tuning workflow also continuously applies

such optimization to all shared workloads.

This allows us to analyze "reaction" of any given workload

to all most efficient optimizations.

We can then group together those workloads which exhibit similar reactions.

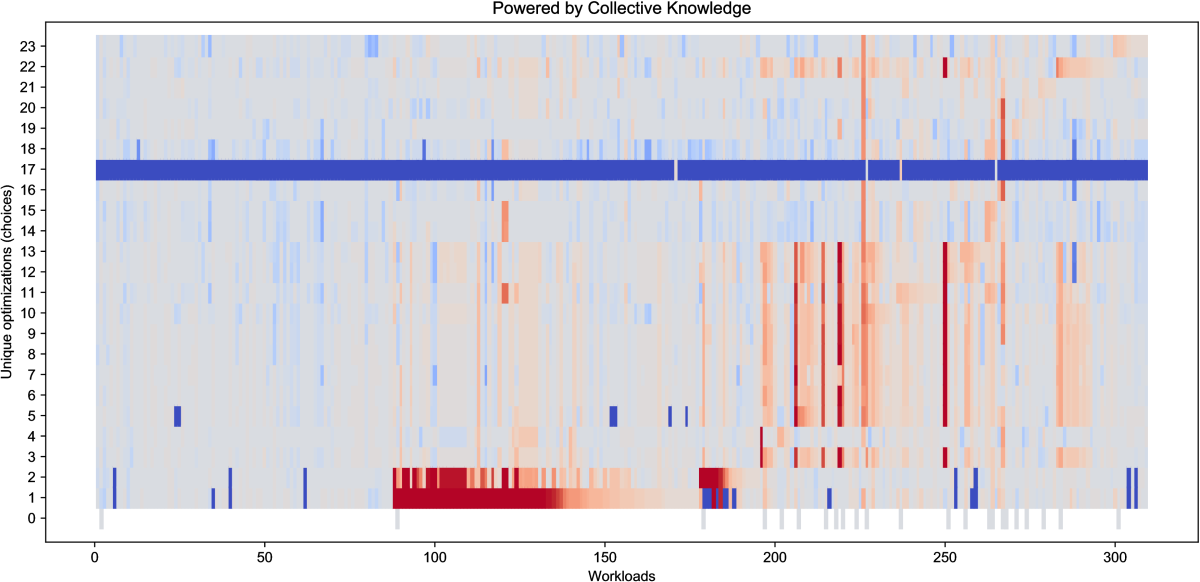

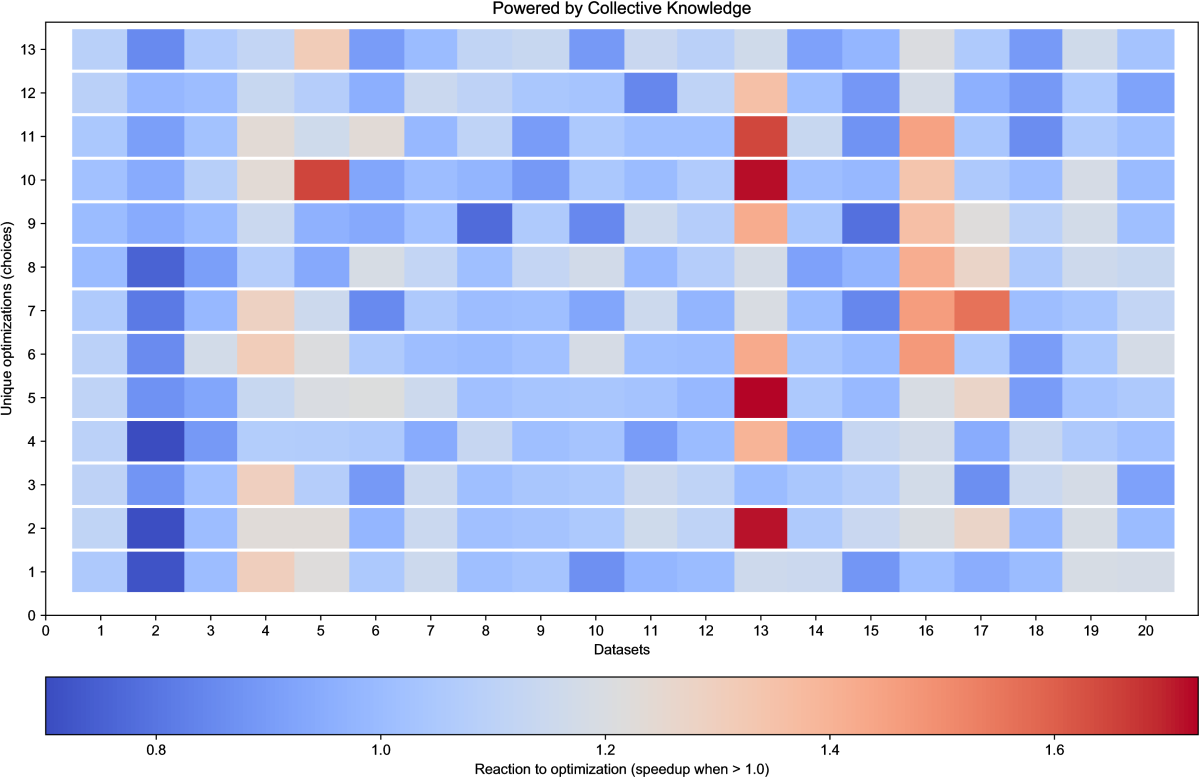

The top graph in Figure 29 shows reactions of all workloads

to the most efficient optimizations as a ratio of the default execution time (-O3)

to the execution time of applied optimization.

It confirms yet again ([6]) that there is no single "winning"

combination of optimizations and they can either considerably improve or degrade execution time

on different workloads.

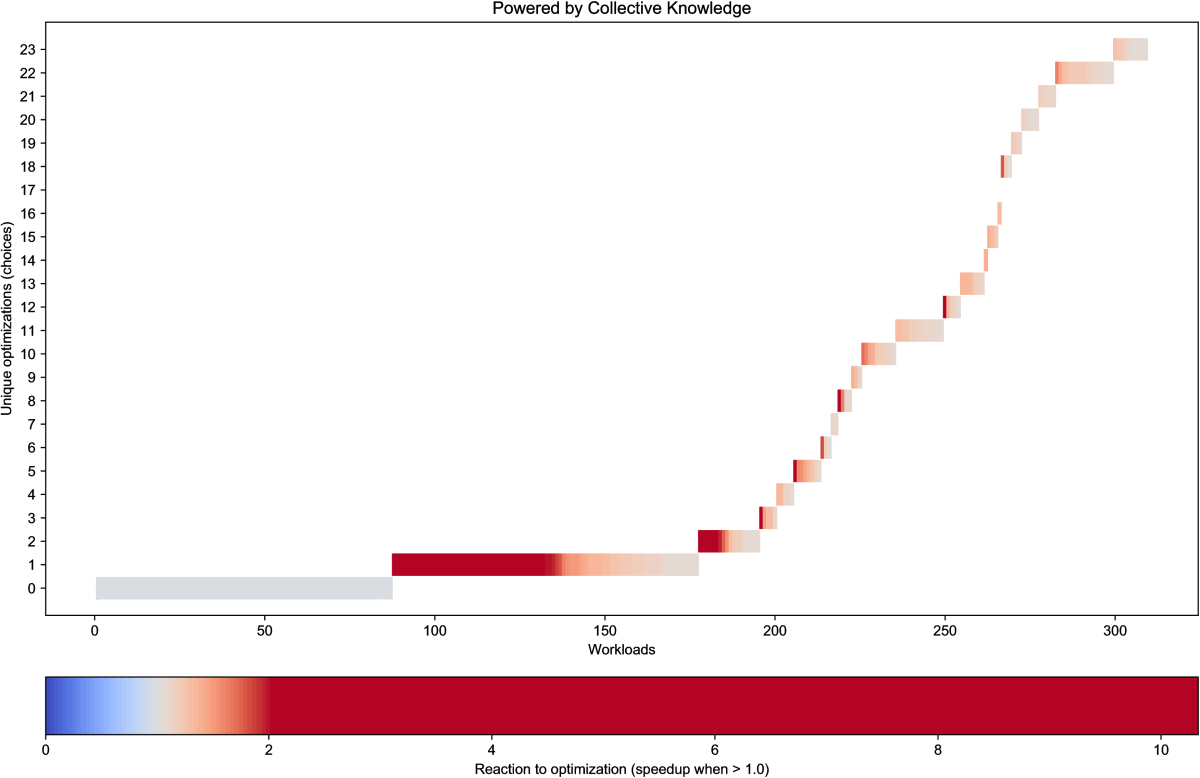

It also confirms that it is indeed possible to group together multiple workloads

which share the most efficient combination of compiler flags, i.e. which achieve

the highest speedup for a common optimization as shown in the bottom graph

in Figure 29.

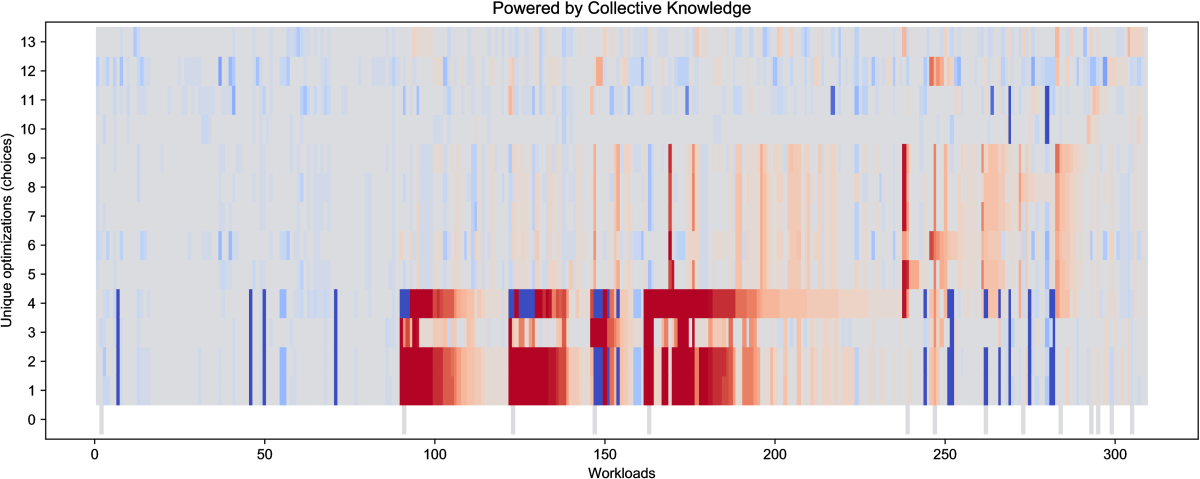

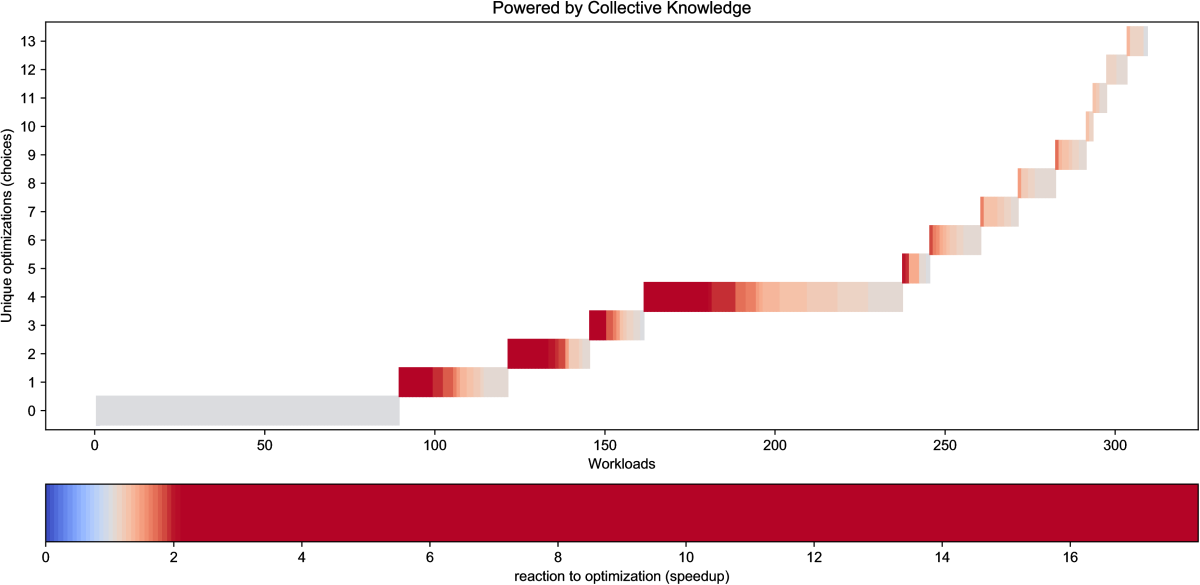

Figure 30 shows similar trends for GCC 7.1.0 on the same RPi3 device

even though the overall number of the most efficient combinations of compiler flags is smaller

than for GCC 4.9.2 likely due to considerably improved internal optimization heuristics over the past years

(see Figure 18).

Having such groups of labeled objects (where labels are the most efficient optimizations

and objects are workloads) allows us to use standard machine learning classification methodology.

One must find such a set of objects' features and a model which maximizes

correct labeling of previously unseen objects, or in our cases can correctly predict

the most efficient software optimization and hardware design for a given workload.

As example, we extracted 56 so-called MILEPOST features described in [24]

(static program properties extracted from GCC's intermediate representation)

from all shared programs, stored them in program.static.features,

and applied simple nearest neighbor classifier to above data.

We then evaluated the quality of such model (ability to predict) using prediction accuracy

during standard leave-one-out cross-validation technique: for each workload we remove it

from the training set, build a model, validate predictions, sum up all correct predictions

and divide by the total number of workloads.

Table 4 shows this prediction accuracy

of our MILEPOST model for compiler flags from GCC 4.9.2 and GCC 7.1.0

across all shared workloads on RPi3 device.

One may notice that it is nearly twice lower than in the

original MILEPOST

paper [24].

As we explain in [6],

in the MILEPOST project we could only use a dozen of similar

workloads and just a few most efficient optimizations to be

able to perform all necessary experiments within a reasonable

amount of time (6 months).

After brining the community on hoard, we could now use a much larger

collective training set with more than 300 shared, diverse

and non-synthesized workloads while analyzing much more optimizations

by crowdsourcing autotuning.

This helps obtain a more realistic limit of the MILEPOST predictor.

Though relatively low, this number can now become a

reference point to be further improved by the community.

It is similar in spirit to the ImageNet Large Scale Visual Recognition Competition

(ILSVRC) [89]

which reduced image classification error rate from 25%

in 2011 to just a few percent with the help of the community.

Furthermore, we can also keep just a few representative

workloads for each representative group as well as misclassified ones in

a public repository thus producing a minimized, realistic and

representative training set for systems researchers.

We shared all demo scripts which we used to generate data and

graphs in this section in the following CK entry (however they are not yet

user-friendly and we will continue improving documentation and

standardizing APIs of reusable CK modules with the help of the community):

Even artifact evaluation which we introduced at systems

conferences [100] to partially solve these issues is not

yet enough because our community does not have a common,

portable and customizable workflow framework.

Bridging this gap between machine learning and systems research

served as an additional motivation to develop Collective Knowledge

workflow framework.

Our idea is to help colleagues and students share various workloads,

data sets, machine learning algorithms, models and feature extractors

as plugins (CK modules) with a common API and meta description.

Plugged to a common machine learning workflow such modules

can then be applied in parallel to continuously compete

for the most accurate predictions for a given optimization scenario.

Furthermore, the community can continue improving and autotuning models,

analyzing various combination of features, experimenting with hierarchical models,

and pruning models to reduce their complexity across shared data sets

to trade off prediction accuracy, speed, size and the ease of interpretation.

For a proof-of-concept of such collaborative learning approach,

we shared a number of customizable CK modules (see ck search module:*model*)

for several popular classifiers including the nearest neighbor,

decision trees and deep learning.

These modules serve as wrappers with a common CK API for

TensorFlow, scikit-learn, R and other machine learning frameworks.

We also shared several feature extractors (see ck search module:*features*)

assembling the following groups of program features

which may influence predictions:

We then attempted to autotune various parameters

of machine learning algorithms exposed via CK API.

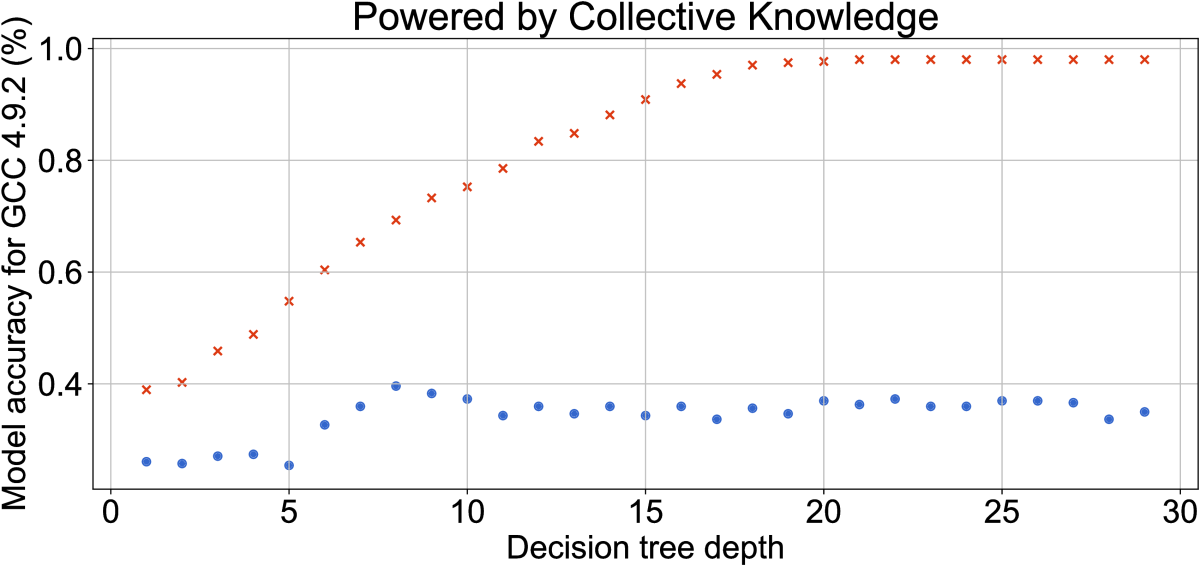

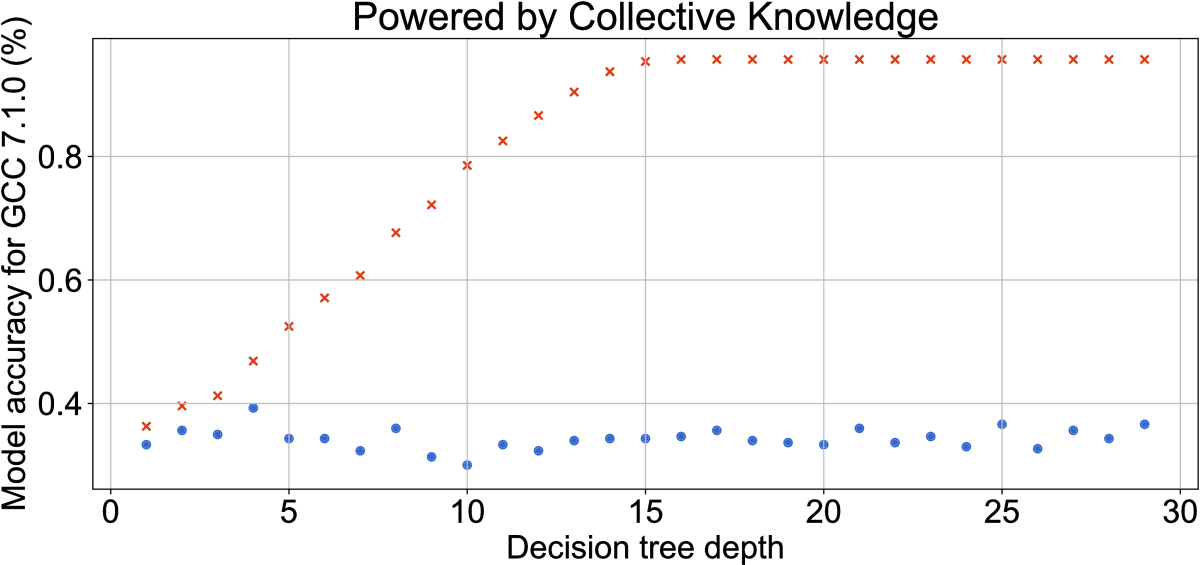

Figure 31

shows an example of autotuning the depth of a decision tree

(available as customizable CK plugin) with all shared groups of features

and its impact on prediction accuracy of compiler flags using MILEPOST

features from the previous section for GCC 4.9.2 and GCC 7.1.0

on RPi3.

Blue round dots obtained using leave-one-out validation suggest

that decision trees of depth 8 and 4 are enough

to achieve maximum prediction accuracy of 0.4% for GCC 4.9.2

and GCC 7.1.0 respectively.

Model autotuning thus helped improve prediction accuracy in comparison

with the original nearest neighbor classifier from the MILEPOST project.

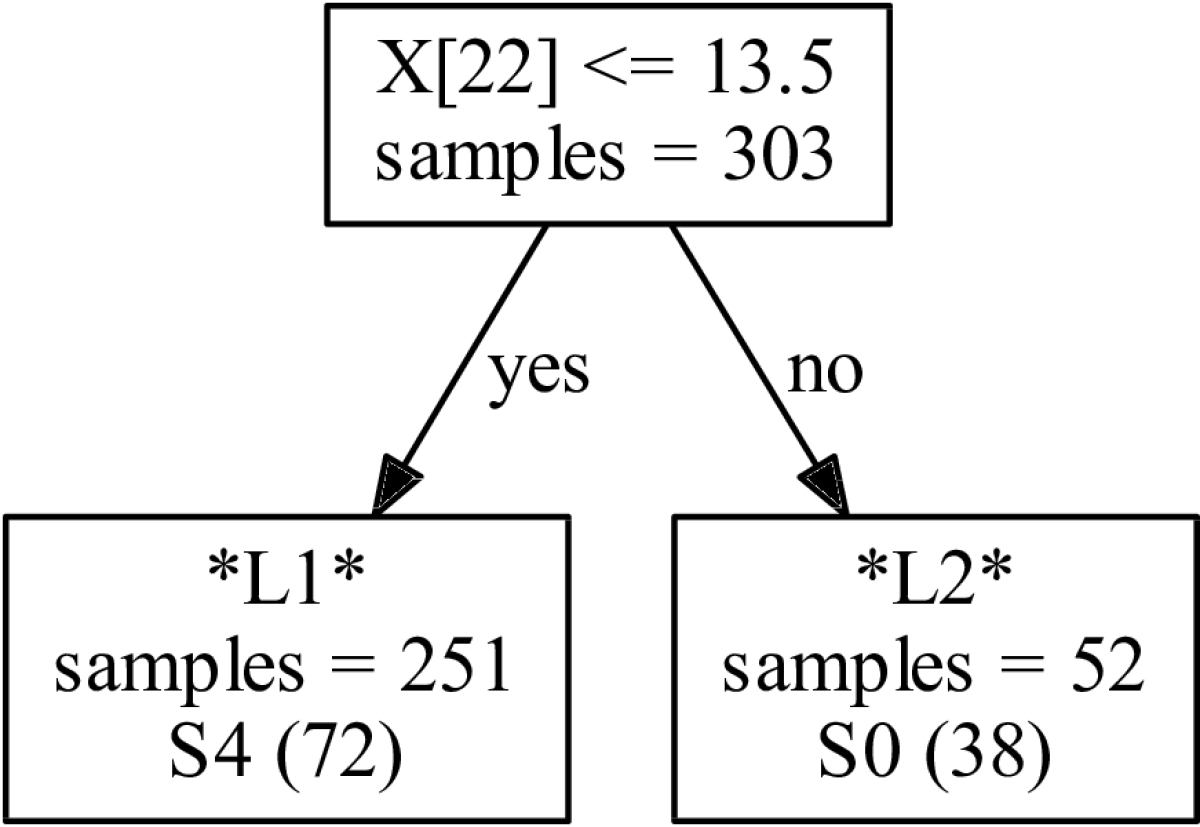

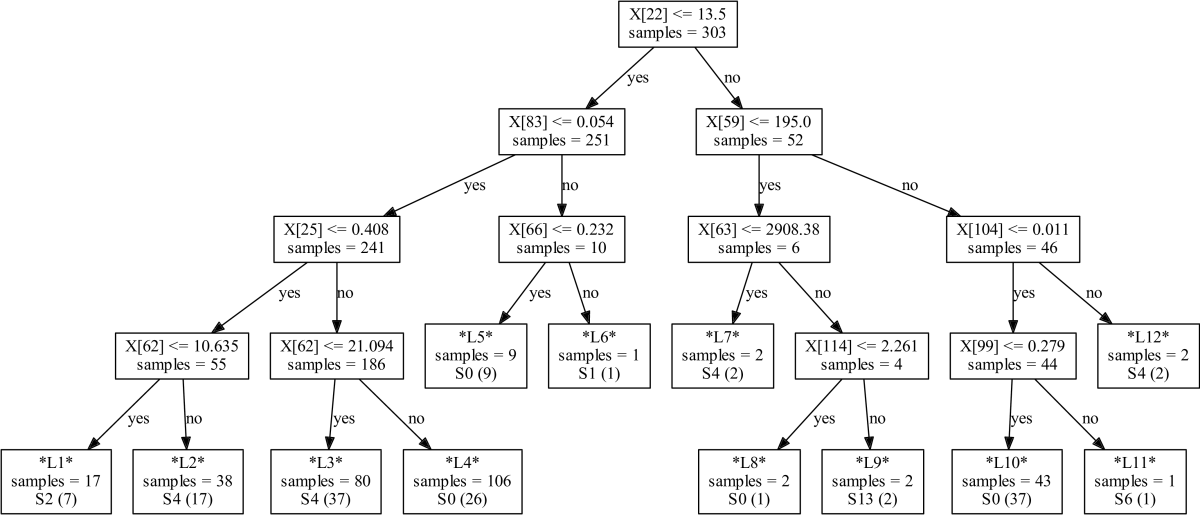

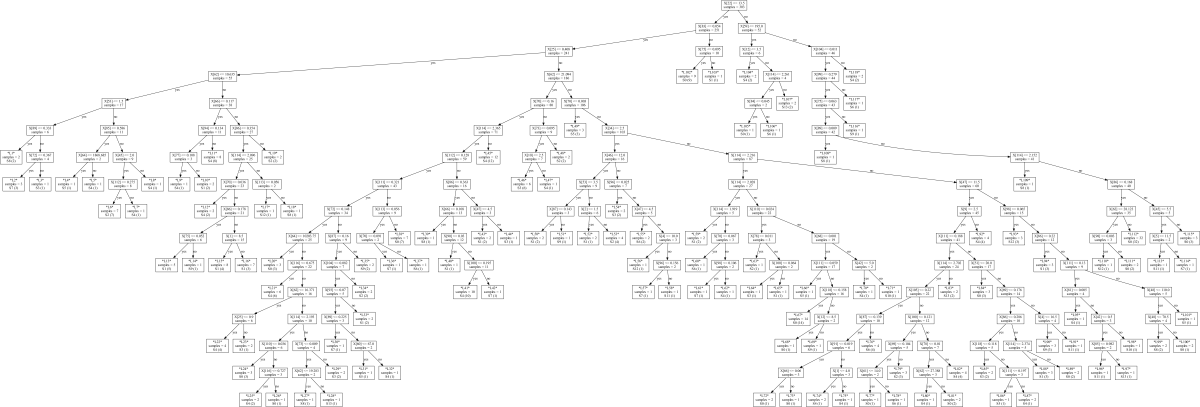

Figure 32 shows a few examples of such automatically

generated decision trees with different depths for GCC 7.1.0 using CK.

Such trees are easy to interpret and can therefore help compiler and hardware

developers quickly understand the most influential features and analyze

relationships between different features and the most efficient

optimizations.

For example, the above results suggest that the number of binary integer operations (ft22)

and the number of distinct operators (ft59) can help predict optimizations

which can considerably improve execution time of a given method over -O3.

Turning off cross-validation can also help developers understand

how well models can perform on all available workloads (in-sample data)

(red dots on Figure 31).

In our case of GCC 7.1.0, the decision tree of depth 15 shown in Figure 32)

is enough to capture all compiler optimizations for 300 available workloads.

To complete our demonstration of CK concepts for collaborative machine learning and optimization,

we also evaluated a deep learning based classifier from TensorFlow [102]

(see ck help module:model.tf)

with 4 random configurations of hidden layers ([10,20,10], [21,13,21], [11,30,18,20,13], [17])

and training steps (300..3000).

We also evaluated the nearest neighbor classifier used in the MILEPOST project but with different groups of features

and aggregated all results in Table 5.

Finally, we automatically reduced the complexity of the nearest neighbor classifier (1) by iteratively removing those features one by one

which do not degrade prediction accuracy and (2) by iteratively adding features one by one to maximize prediction accuracy.

It is interesting to note that our nearest neighbor classifier achieves

a slightly better prediction accuracy with a reduced feature set than with

a full set of features showing inequality of MILEPOST features

and overfitting.

As expected, deep learning classification achieves a better prediction accuracy of 0.68%

and 0.45% for GCC 4.9.2 and GCC 7.1.0 respectively for RPi3 among currently shared models,

features, workloads and optimizations.

However, since deep learning models are so much more computationally intensive, resource hungry

and difficult to interpret than decision trees, one must carefully balance accuracy vs speed vs size.

That is why we suggest to use hierarchical models where

high-level and coarse-grain program behavior is quickly captured

using decision trees, while all fine-grain behavior is captured

by deep learning and similar techniques.

Another possible use of deep learning can be in automatically capturing

influential features from the source code, data sets and hardware.

All scripts to generate above experiments (require further documentation)

are available in the following CK entry:

First, we converted 474 different data sets from the MiDataSet suite [103]

as pluggable CK artifacts and shared them as a zip archive ( 800MB).

It is possible to download it from the Google Drive

from https://drive.google.com/open?id=0B-wXENVfIO82OUpZdWIzckhlRk0

(we plan to move it to a permanent repository in the future)

and then install via CK as following:

All these data sets will be immediately visible to all related programs

via the CK autotuning workflow.

For example, if we now run susan corners program, CK will prompt user

a choice of 20 related images from the above data sets:

Next, we can apply all most efficient compiler optimizations

to a given program with all data sets.

Figure 33 shows such reactions

(ratio of an execution time with a given optimization to an execution time

with the default -O3 compiler optimization) of a jpeg decoder across

20 different jpeg images from the above MiDataSet on RPi3.

One can observe that the same combination of compiler flags can both

considerably improve or degrade execution time for the same program

but across different data sets.

For example, data sets 4,5,13,16 and 17 can benefit from the most

efficient combination of compiler flags found by the community

with speedups ranging from 1.2 to 1.7.

On the other hand, it's better to run all other data sets with

the default -O3 optimization level.

Unfortunately, finding data set and other features which could easily differentiate

above optimizations is often very challenging.

Even deep learning may not help if a feature is not yet exposed.

We explain this issue in [6]

when optimizing real B&W filter kernel - we managed to improve

predictions by exposing a "time of the day" feature

only via human intervention.

However, yet again, the CK concept is to bring the interdisciplinary

community on board to share such cases in a reproducible way

and then collaboratively find various features to improve predictions.

Another aspect which can influence the quality of predictive models,

is that the same combinations of compiler flags are too coarse-grain

and can make different internal optimization decisions

for different programs.

Therefore, we need to have an access to fine-grain optimizations

(inlining, tiling, unrolling, vectorization, prefetching, etc)

and related features to continue improving our models.

However, this follows our top-down optimization and modeling methodology

which we implemented in the Collective Knowledge framework.

We want first to analyze, optimize and model coarse-grain behavior of shared workloads

together with the community and students while gradually adding more workloads,

data sets, models and platforms.

Only when we reached the limit of prediction accuracy,

we start gradually exposing finer-grain optimizations

and features via extensible CK JSON interface

while avoiding explosion in design and optimization spaces

(see details in [4] for our previous

version of the workflow framework, Collective Mind).

This is much in spirit of how physicists moved from Newton's

three coarse-grain laws of motion to fine-grain quantum mechanics.

To demonstrate this approach, we shared a simple skeletonized

matrix multiply kernel from [104] in the CK format

with blocking (tiling) parameter and data set feature

(square matrix size) exposed via CK API:

We can then reuse universal autotuning (exploration) strategies

available as CK modules or implement specialized ones to explore

exposed fine-grain optimizations versus different data sets.

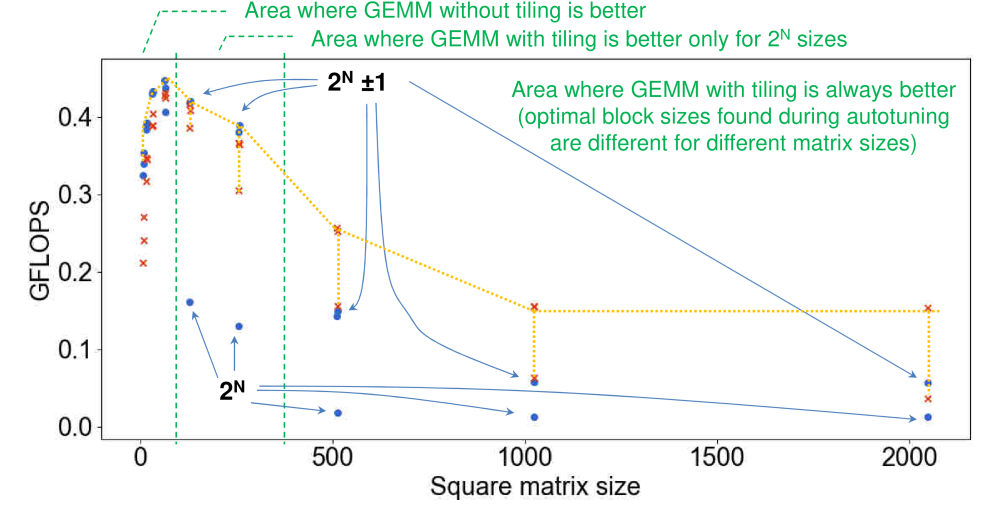

Figure 34 shows matmul performance

in GFLOPS during random exploration of a blocking parameter for different square

matrix sizes on RPi3.

These results are in line with multiple past studies showing that

unblocked matmul is more efficient for small matrix sizes (less than 32

on RPi3) since all data fits cache, or between 32 and 512 (on RPi3)

if they are not power of 2.

In contrast, the tiled matmul is better on RPi3 for matrix sizes of power of 2 between 32 and 512,

since it can help reduce cache conflict misses, and for all matrix sizes more than 512

where tiling can help optimize access to slow main memory.

Our customizable workflow can help teach students how to build efficient,

adaptive and self-optimizing libraries including BLAS, neural networks and FFT.

Such libraries are assembled from the most efficient routines

found during continuous crowd-tuning across numerous data sets and platforms,

and combined with fast and automatically generated decision trees

or other more precise classifiers [105, 106, 107, 6].

The most efficient routines are then selected at run-time

depending on data set, hardware and other features as conceptually shown

in Figure 35..

All demo scripts to generate data and graphs in this section are available in the following CK entries:

This is in spirit with existing machine learning competitions

such as Kaggle and ImageNet challenge [108, 109]

to improve prediction accuracy of various models.

The main difference is that we want to focus on

optimizing the whole software/hardware/model stack

while trading off multiple metrics including speed, accuracy,

and costs [40, 6, 110].

Experimental results from such competitions can be continuously aggregated

and presented in the live Collective Knowledge scoreboard [38].

Other academic and industrial researchers can then pay

a specific attention to the "winning" techniques close

to a Pareto frontier in a multi-dimensional

space of accuracy, execution time, power/energy consumption,

hardware/code/model footprint, monetary costs etc

thus speeding up technology transfer.

Furthermore, "winning" artifacts and workflows can now be recompiled,

reused and extended on the newer platforms with the latest

environment thus improving overall research sustainability.

For a proof-of-concept, we started helping some authors convert their

artifacts and experimental workflows to the CK format during

Artifact Evaluation [111, 55, 7].

Association for Computing Machinery (ACM) [112]

also recently joined this effort funded by the Alfred P. Sloan Foundation

to convert already published experimental workflows

and artifacs from the ACM Digital Library

to the CK format [113].

We can then reuse CK functionality to crowdsource benchmarking and multi-objective

autotuning of shared workloads across diverse data sets, models and platforms.

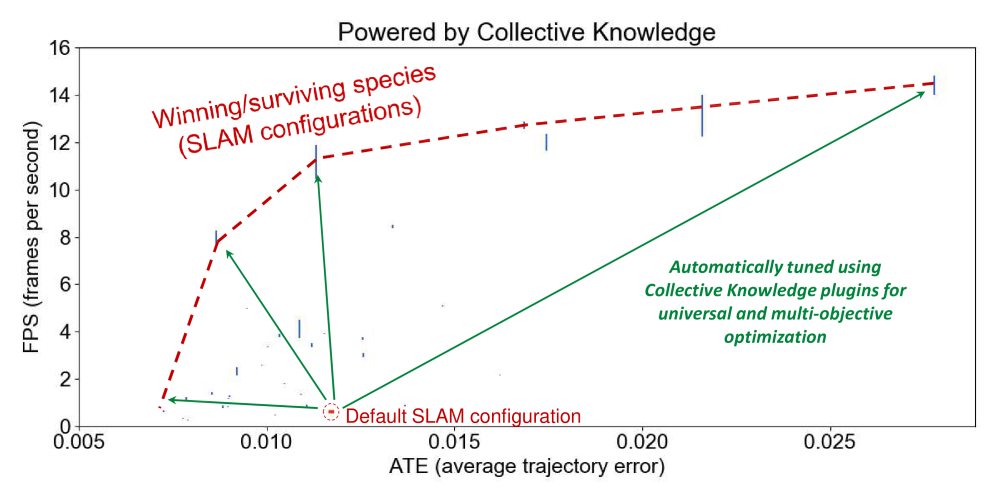

For example, Figure 36 shows

results from random exploration of various SLAM algorithms (Simultaneous localization and mapping)

and their parameters from [114] in terms of accuracy (average trajectory error or ATE)

versus speed (frames per second) on RPi3 using CK [115].

Researchers may easily spend 50% of their time developing

experimental, benchmarking and autotuning infrastructure

in such complex projects, and then continuously

updating it to adapt to ever changing software and hardware

instead of innovating.

Worse, such ad-hoc infrastructure may not even survive

the end of the project or if leading developers leave project.

Using common and portable workflow framework can relieve researchers

from this burden and let them reuse already existing artifacts and

focus on innovation rather than re-developing ad-hoc software from scratch.

Other researchers can also pick up the winning designs on a Pareto frontier,

reproduce results via CK, try them on different platforms

and with different data sets, build upon them,

and eventually try to develop more efficient algorithms.

Finally, researchers can implement a common experimental methodology

to evaluate empirical results in systems research similar to physics

within a common workflow framework rather than writing their own

ad-hoc scripts.

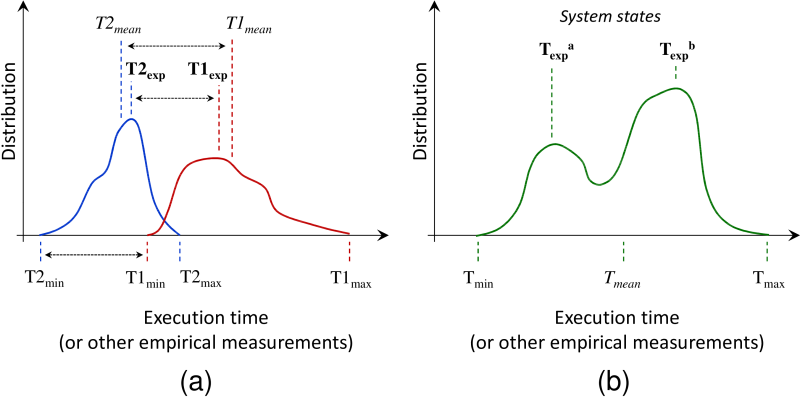

Figure 37 shows statistical analysis

of experimental results implemented in the CK to compare different

optimizations depending on research scenarios.

For example, we report minimal execution time from multiple experiments

to understand the limits of a given architecture, expected value to see

how a given workload performs on average, and max time to detect

abnormal behavior.

If more than one expected value is detected, it usually means

that system was in several different run-time states during experiments

(often related to adaptive changes in CPU and GPU frequency due to DVFS)

and extra analysis is required.

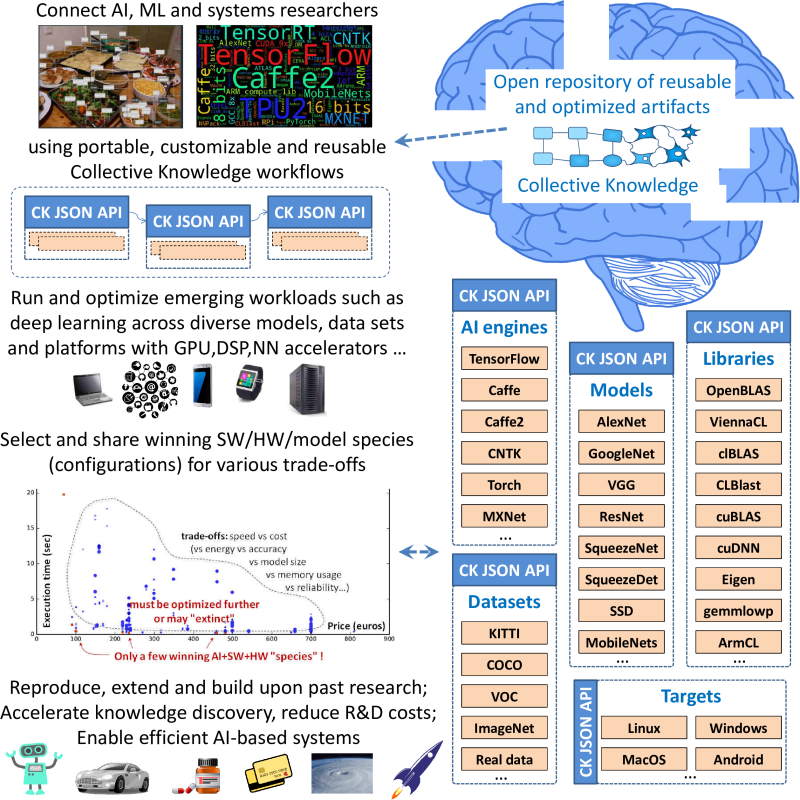

We now plan to validate our Collective Knowledge approach

in the 1st reproducible ReQuEST tournament

at the ACM ASPLOS'18 conference [40]

as presented in Figure 38.

ReQuEST is aimed at providing a scalable tournament framework,

a common experimental methodology and an open repository for continuous evaluation

and optimization of the quality vs. efficiency Pareto optimality of a wide range

of real-world applications, libraries, and models across the whole

hardware/software stack on complete platforms.

ReQuEST also promote reproducibility of experimental results and reusability/customization

of systems research artifacts by standardizing evaluation methodologies and facilitating

the deployment of efficient solutions on heterogeneous platforms.

ReQuEST will use CK and our artifact evaluation methodology [7]

to provide unified evaluation and a live scoreboard of submissions.

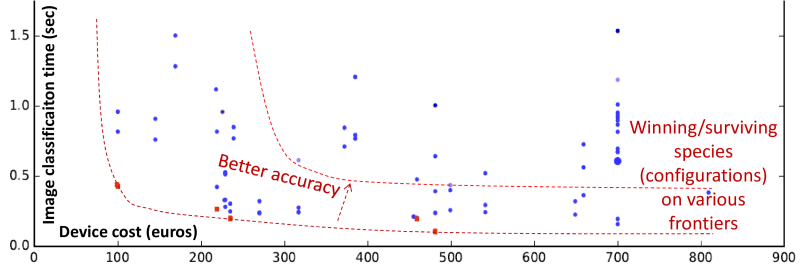

Figure 39 shows a proof-of-concept example of such a

scoreboard powered by CK to collaboratively benchmark inference (speed vs. platform cost)

across diverse deep learning frameworks (TensorFlow, Caffe, MXNet, etc.),

models (AlexNet, GoogleNet, SqueezeNet, ResNet, etc.), real user data sets, and mobile devices

provided by volunteers (see the latest results at cKnowledge.org/repo).

Our goal is to teach students and researchers how to

The major issues including raising complexity, lack of a common experimental framework

and lack of practical knowledge exchange between academia and industry.

Rather than innovating, researchers have to spend more and more time

writing their own, ad-hoc and not easily customizable support tools

to perform experiments such as multi-objective autotuning.

We presented our long-term educational initiative to teach

students and researchers how to solve the above problems

using customizable workflow frameworks similar to other sciences.

We showed how to convert ad-hoc, multi-objective

and multi-dimensional autotuning into a portable and customizable workflow

based on open-source Collective Knowledge workflow framework.

We then demonstrated how to use it to implement various scenarios

such as compiler flag autotuning of benchmarks and realistic workloads

across Raspberry Pi 3 devices in terms of speed and size.

We also demonstrated how to crowdsource such autotuning across different

devices provided by volunteers similar to SETI@home, collect the most efficient optimizations

in a reproducible way in a public repository of knowledge at cKnowledge.org/repo,

apply various machine learning techniques including decision trees, the nearest neighbor classifier

and deep learning to predict the most efficient optimizations for previously

unseen workloads, and then continue improving models and features

as a community effort.

We now plan to develop an open web platform together with the community

to provide a user-friendly front-end to all presented workflows

while hiding all complexity.

We use our methodology and open-source CK workflow framework and repository

to teach students how to exchange their research artifacts and results

as reusable components with a a unified API and meta-information,

perform collaborative experiments, automate Artifact Evaluation

at journals and conferences [7], build upon each others' work,

make their research more reproducible and sustainable,

and eventually accelerate transfer of their ideas to industry.

Students and researchers can later use such skills and unified artifacts

to participate in our open ReQuEST tournaments on reproducible and Pareto-efficient

co-design of the whole software and hardware stack for emerging workloads

such as deep learning and quantum computing in terms of

speed, accuracy, energy and costs [40].

This, in turn, should help the community build an open repository of

portable, reusable and customizable algorithms continuously optimized

across diverse platforms, models and data sets

to assemble efficient computer systems

and accelerate innovation.

We would like to thank Raspberry Pi foundation for initial financial support.

We are also grateful to Flavio Vella, Marco Cianfriglia, Nikolay Chunosov, Daniil Efremov, Yuriy Kashnikov,

Peter Green, Thierry Moreau, ReQuEST colleagues, and the Collective Knowledge community

for evaluating Collective Knowledge concepts and providing useful feedback.

Submission guidelines: This is an example of an Artifact Appendix which we introduced

at the computer systems conferences including CGO, PPoPP, PACT and SuperComputing

to gradually unify artifact evaluation, sharing and reuse [7, 8, 9, 5].

We briefly describe how to install and use our autotuning workflow, visualize optimization results and reproduce them.

We also shared all scripts which we used to generate data and